This won’t take long…

Linux or macOS:

curl -sfL https://get.gpustack.ai | sh -s -

Windows (PowerShell):

Invoke-Expression (Invoke-WebRequest -Uri "https://get.gpustack.ai" -UseBasicParsing).Content

For detailed installation instructions, refer to the docs.

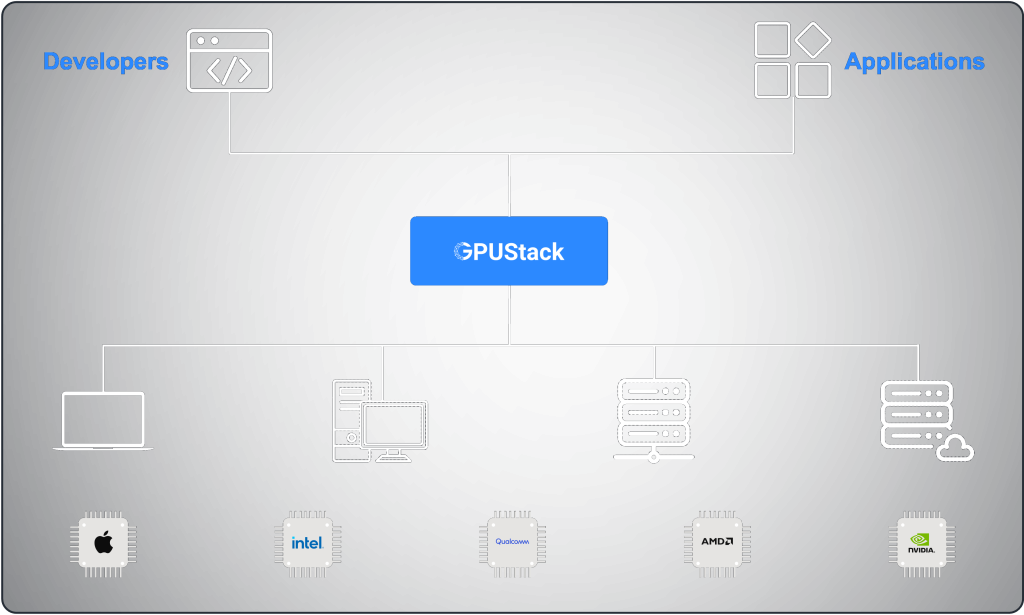

Seamlessly adaptable from desktop to server, across all major OS and GPU hardware.

One-click deployment with a fully integrated technical stack, built for enterprise needs.

100% open source and fully on-premises, with complete control over your data and access in your own environment.

Develop and test your AI applications with models on your local Mac or Windows desktop, then transition to production models on Linux GPU servers. GPUStack runs on macOS, Windows, and Linux and provides a consistent experience across them.

With support for vLLM, llama.cpp, and more, GPUStack is built for cross-platform compatibility and performance. Easily extend and upgrade your inference engines and models to meet evolving needs.

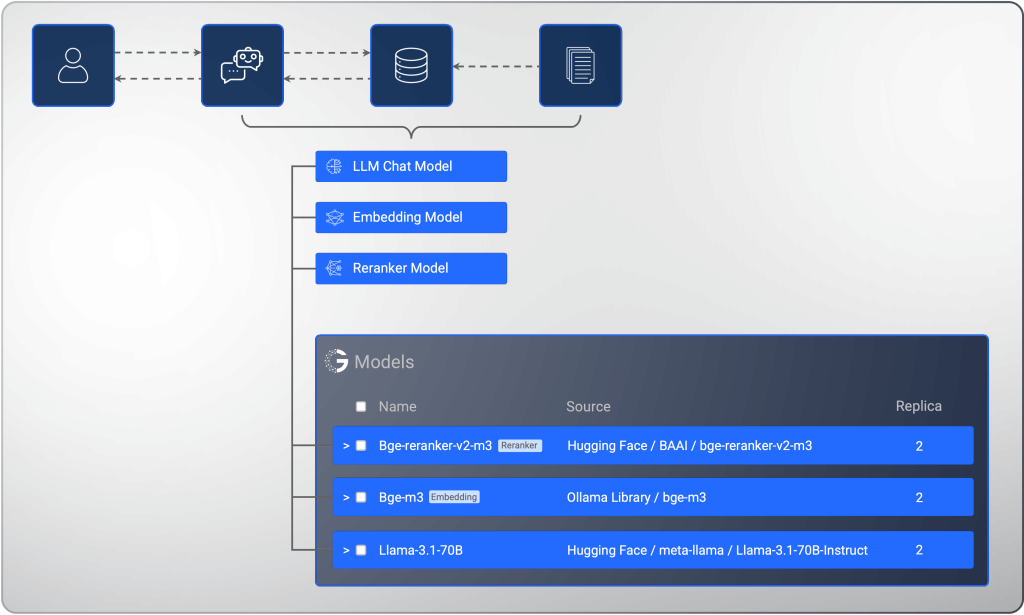

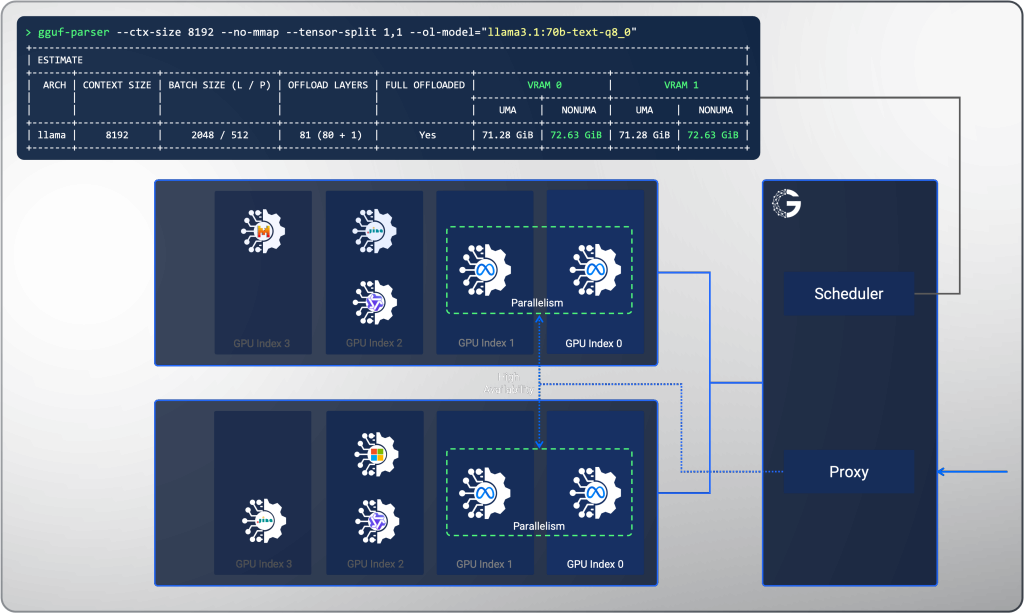

GPUStack is designed to maximize GPU utilization and keep models highly available through flexible scheduling strategies, automated resource calculation, and support for multiple replicas per model with automatic load balancing.
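To make the ideas of resource calculation, replica placement, and load balancing more concrete, here is a minimal sketch. It is not GPUStack's scheduler: the `Gpu` class, the `estimate_vram_gb` and `place_replicas` helpers, the 1.2 overhead factor, and the GPU sizes are all hypothetical, and the sketch swaps in a simple greedy placement and round-robin routing purely for illustration.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Gpu:
    name: str
    free_vram_gb: float


def estimate_vram_gb(params_billion, bytes_per_param, overhead=1.2):
    """Rough estimate: weight size padded by an assumed overhead factor."""
    return params_billion * bytes_per_param * overhead


def place_replicas(gpus, need_gb, replicas):
    """Greedy placement: each replica goes to the GPU with the most free VRAM."""
    free = {gpu.name: gpu.free_vram_gb for gpu in gpus}
    placement = []
    for _ in range(replicas):
        best = max(free, key=free.get)
        if free[best] < need_gb:
            raise RuntimeError("not enough free VRAM for another replica")
        free[best] -= need_gb
        placement.append(best)
    return placement


# A 7B-parameter model stored in 16-bit precision (2 bytes per parameter).
need = estimate_vram_gb(params_billion=7, bytes_per_param=2)

# Hypothetical GPUs with 24 GB and 32 GB of free memory.
gpus = [Gpu("gpu-0", 24.0), Gpu("gpu-1", 32.0)]
placement = place_replicas(gpus, need, replicas=2)

# Round-robin load balancing: requests alternate between the replicas.
next_replica = cycle(placement)
routed = [next(next_replica) for _ in range(4)]

print(f"need per replica: {need:.1f} GB")
print("placement:", placement)
print("routed:", routed)
```

With these assumed numbers, the first replica lands on the GPU with more free memory and the second on the other one, so four incoming requests alternate between the two. A production scheduler would also need to account for KV cache size, context length, and multi-GPU or multi-node splits.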

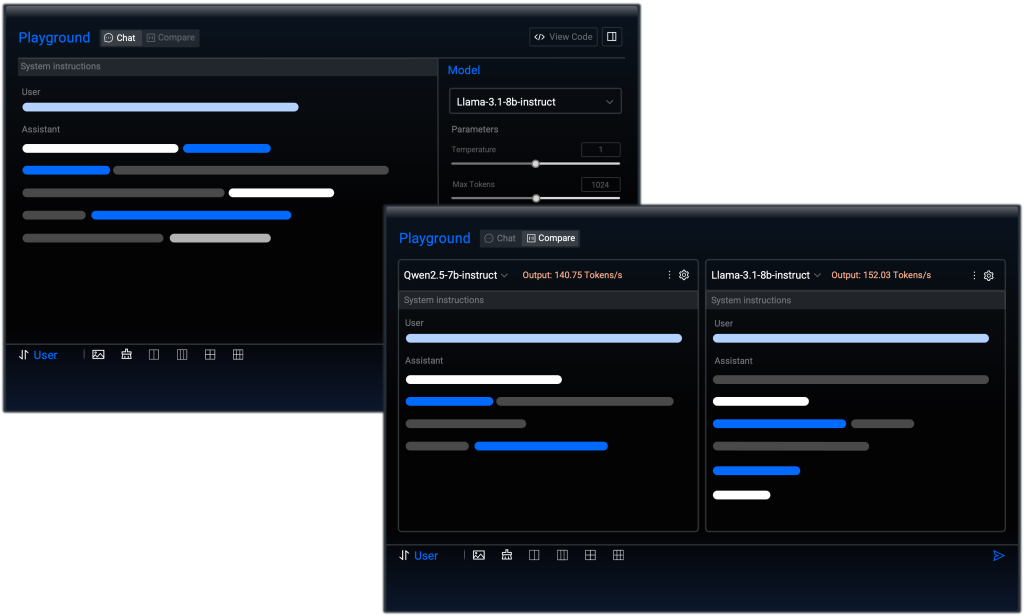

The Playground enables fast iteration and testing with tools like prompt tests, parameter configuration, multi-model comparison, and code examples.

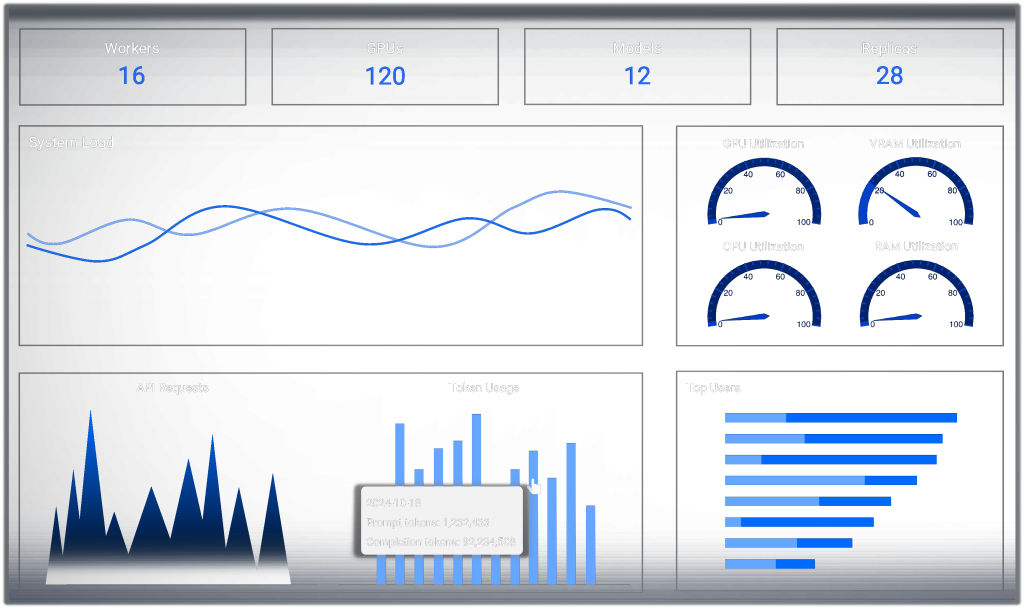

The Dashboard provides real-time insights into system performance, resource usage, and API access statistics. Track token usage, top users, and model status to ensure optimal operations and efficiency.
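As a rough illustration of the kind of aggregation behind "token usage" and "top users" figures, the sketch below totals tokens per user and per model from a request log. The log records, user names, and model names are made up for the example, and this is an assumption about how such statistics could be computed, not a description of how the Dashboard computes them.

```python
from collections import Counter

# Hypothetical request log: (user, model, prompt_tokens, completion_tokens).
request_log = [
    ("alice", "model-a", 120, 380),
    ("bob", "model-a", 60, 90),
    ("alice", "model-b", 200, 150),
    ("carol", "model-b", 30, 40),
]

tokens_by_user = Counter()
tokens_by_model = Counter()
for user, model, prompt_tokens, completion_tokens in request_log:
    total = prompt_tokens + completion_tokens
    tokens_by_user[user] += total
    tokens_by_model[model] += total

# The two users who consumed the most tokens, highest first.
top_users = tokens_by_user.most_common(2)

print("top users:", top_users)
print("tokens per model:", dict(tokens_by_model))
```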