How Walrus Simplifies Infrastructure Management with Terraform

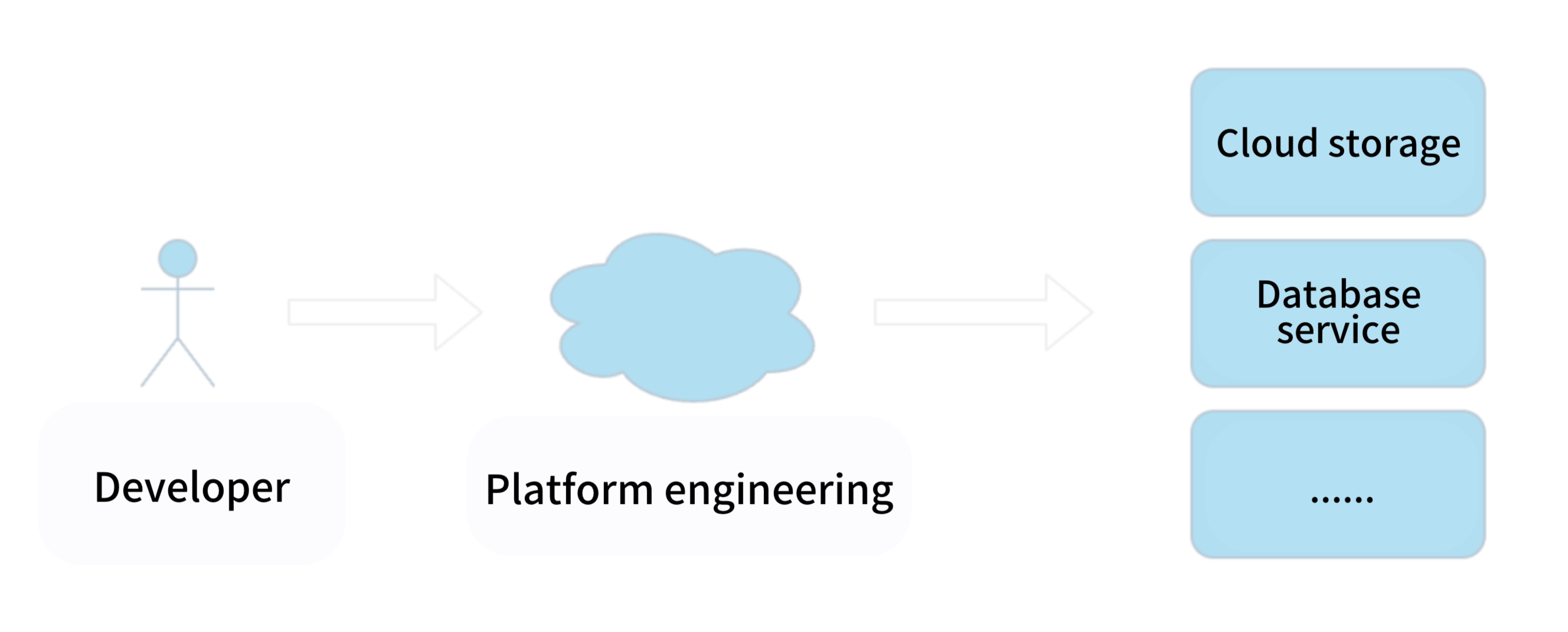

Platform Engineering has gained significant attention in recent years as a key concept in the world of technology. It revolves around a software engineering methodology that seeks to boost developer productivity by simplifying and minimizing the uncertainties linked with contemporary software deployment. An exemplary embodiment of Platform Engineering is the Internal Development Platform (IDP).

In this article, we draw on the principles of Platform Engineering to introduce the core concepts and uses of Terraform, examine the challenges that come with using it, and show how Walrus addresses them.

What is an Internal Development Platform (IDP)?

An Internal Development Platform (IDP), in essence, serves as a self-service layer strategically layered atop an organization's existing technology tools, primarily catering to engineering teams. Crafted and curated by the platform team itself, the IDP empowers developers to effortlessly configure, deploy, and initiate application infrastructure, all without relying on the operations team.

Essentially, the IDP is a vital cog in the wheel of automation for operational workflows, significantly enhancing work efficiency through simplified application configuration and streamlined infrastructure management.

In contrast to traditional infrastructure deployment and management methods characterized by manual configurations, IDP ushers in a paradigm shift. Traditionally, administrators were burdened with the onerous task of manually handling infrastructure components like servers, network devices, and storage. They had to painstakingly install and configure software while grappling with diverse dependencies and dynamic environmental changes. This approach was inherently time-consuming, demanded considerable effort, and remained susceptible to configuration errors and inconsistencies.

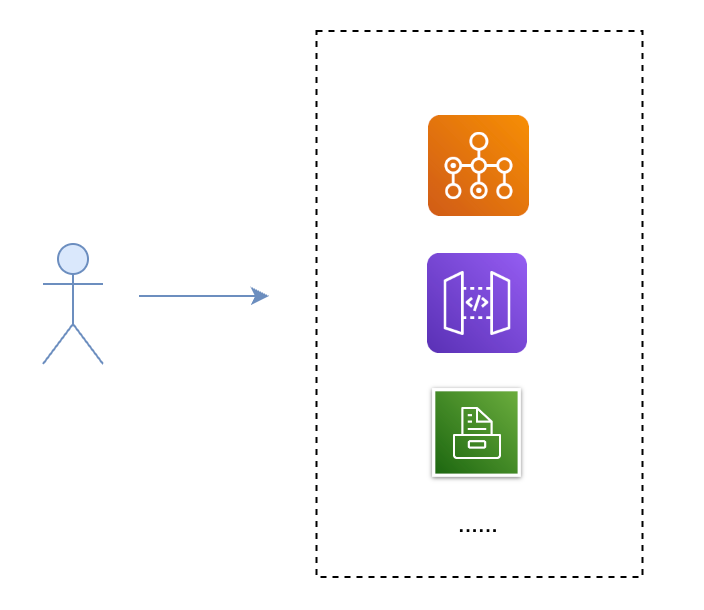

One of the central objectives of Platform Engineering revolves around simplifying infrastructure utilization for developers. For the majority of developers, delving into the intricate nuances and underlying service creation methods is typically beyond their purview. Consider, for instance, the realm of storage services; developers may require their functionality but are often uninterested in the intricacies of their creation. Concepts like object storage and disk arrays typically fall outside the scope of developers' concerns.

In most business scenarios, developers are primarily concerned with how to employ these services effectively and whether they can reliably store data in designated locations. This is where Platform Engineering shines by seamlessly offering storage services tailored to the needs of most use cases. Developers can conveniently select the service type they require, leverage the capabilities of Platform Engineering to instantiate the service, obtain the service's address, and promptly commence utilization.

Platform Engineering accomplishes this by abstracting service definitions and introducing the notion of applications layered on top of these services. Multiple applications can be combined to build a complete business scenario, linked by explicit or implicit relationships. Through these relationships, they form a coherent business system that exposes its services externally.

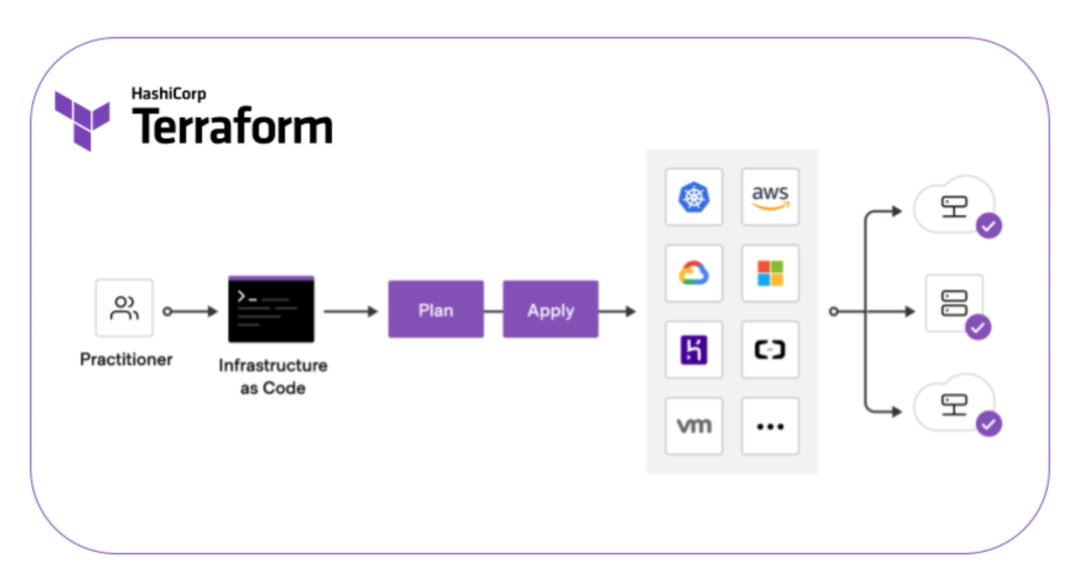

What is Terraform?

Terraform is a potent Infrastructure as Code (IaC) tool designed to facilitate the secure, efficient construction, alteration, and upkeep of infrastructure. This encompasses not only fundamental components like computing instances, storage, and networking but also higher-level elements such as DNS entries and Software as a Service (SaaS) functionalities.

In the era before Terraform's advent, infrastructure management was a labor-intensive, error-prone, and intricate endeavor that heavily relied on manual operations. However, Terraform has revolutionized infrastructure management by making it more straightforward, efficient, and dependable. It particularly shines in cloud environments, including AWS, GCP, Azure, Alibaba Cloud, and others. Terraform's versatility is attributed to its comprehensive providers, which function akin to plugins, facilitating seamless scalability.

Terraform uses the HashiCorp Configuration Language (HCL) to describe and maintain infrastructure resources. Before execution, the terraform plan command lets you preview resource changes. These changes are tracked through Terraform's state files.
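To make the provider and HCL ideas concrete, here is a minimal sketch of how a configuration declares the provider it needs, which terraform init then downloads. The version constraint is an illustrative assumption, not a recommendation from this article.

```hcl
terraform {
  required_providers {
    # The Kubernetes provider published on the public Terraform Registry.
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0" # assumed constraint; adjust to your needs
    }
  }
}
```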

The concept of Terraform State plays a pivotal role in monitoring the current status and configuration of your infrastructure. When deploying infrastructure with Terraform, it diligently monitors the created resources and their configuration states. This information is stored either in a local .tfstate file or managed remotely via storage solutions like AWS S3 or Azure Blob Storage.

The state file serves as a ledger of resource statuses, and as resources evolve, so do their configuration states. Consequently, Terraform provides the capability to preview these resource transformations before enacting them. Terraform's configuration state file can either be stored locally or within remote storage solutions such as S3, Consul, GCS, Kubernetes, or other customized HTTP backends.
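As a rough sketch of remote state storage, the following backend block keeps the state file in an S3 bucket instead of the local working directory. The bucket name, key, and region are hypothetical placeholders.

```hcl
terraform {
  backend "s3" {
    bucket  = "example-terraform-state" # hypothetical bucket name
    key     = "demo/terraform.tfstate"  # path of the state object in the bucket
    region  = "us-east-1"               # assumed region
    encrypt = true                      # enable server-side encryption at rest
  }
}
```

After adding or changing a backend block, terraform init has to be run again so that Terraform can configure the new backend.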

Installing Terraform

macOS

    brew tap hashicorp/tap
    brew install hashicorp/tap/terraform

Linux Ubuntu

    wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg
    echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list
    sudo apt update && sudo apt install terraform

Linux CentOS

    sudo yum install -y yum-utils
    sudo yum-config-manager --add-repo https://rpm.releases.hashicorp.com/RHEL/hashicorp.repo
    sudo yum -y install terraform

Linux binary installation

Linux users can also install Terraform from a prebuilt binary by downloading the desired version from the official website.

Install Terraform version 1.4.6 via binary:

    curl -sfL https://releases.hashicorp.com/terraform/1.4.6/terraform_1.4.6_linux_amd64.zip -o /tmp/terraform.zip
    sudo unzip /tmp/terraform.zip -d /usr/bin/
    rm -f /tmp/terraform.zip

Windows

Windows users can install Terraform using Chocolatey, which is a package manager for Windows, similar to Linux's yum or apt-get. Chocolatey enables you to quickly install and uninstall software, making it a convenient way to manage software packages on Windows.

    choco install terraform

As an alternative approach, you have the option to download the relevant Terraform version directly from the official Terraform website, extract the files, and configure the PATH environment variable accordingly. To ensure that the installation was successful, you can then confirm it by executing the terraform version command. This method grants users manual control over the installation procedure and is well-suited for those who prefer not to rely on package managers like Chocolatey.

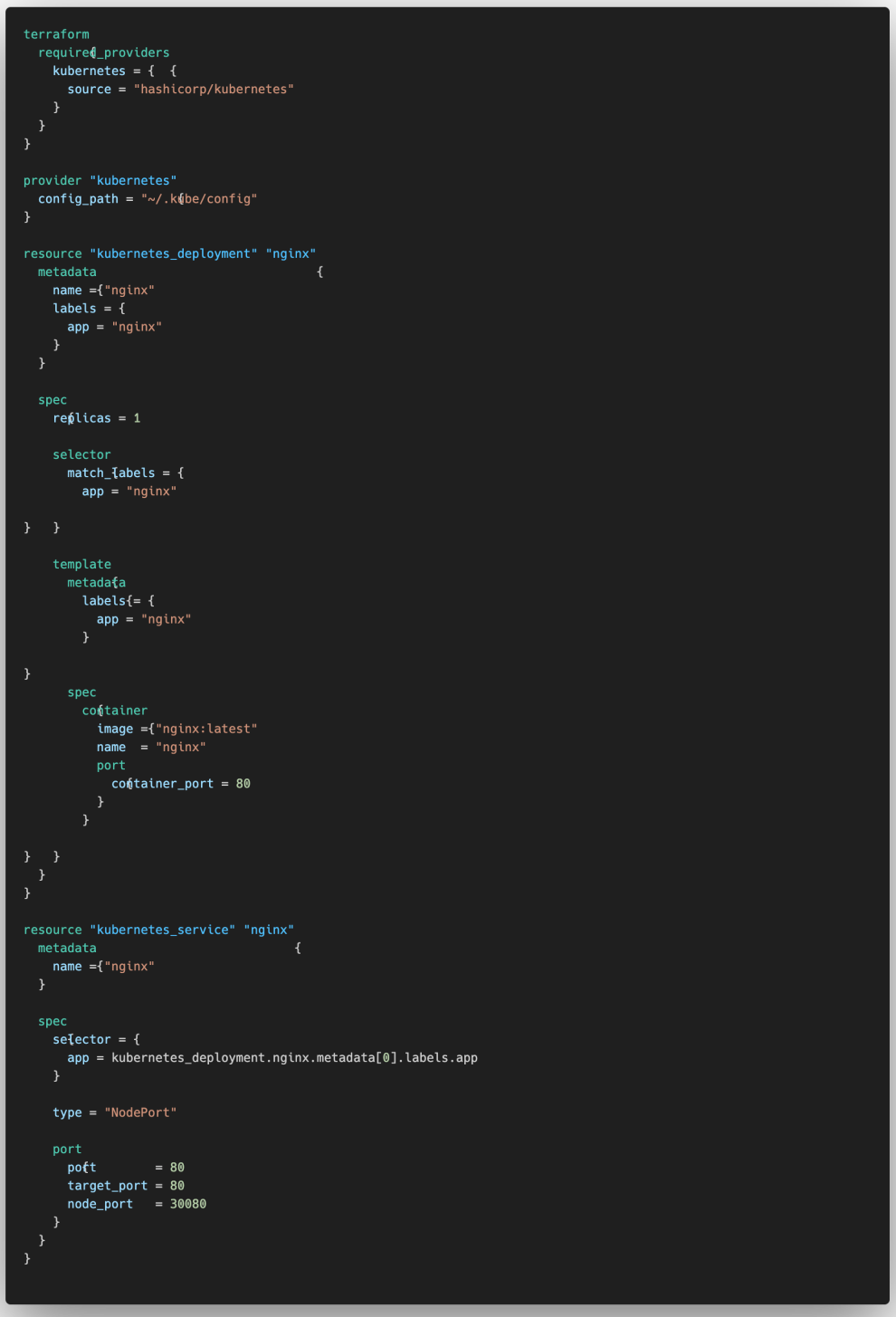

Example of Terraform Managing Kubernetes Resources

First, configure the Kubernetes provider. It can manage Kubernetes resources using the ~/.kube/config file or any other kubeconfig file of your choice.

    provider "kubernetes" {
      config_path = "~/.kube/config"
    }

Create a Kubernetes Deployment:

    resource "kubernetes_deployment" "nginx" {
      metadata {
        name = "nginx"
        labels = {
          app = "nginx"
        }
      }

      spec {
        replicas = 1

        selector {
          match_labels = {
            app = "nginx"
          }
        }

        template {
          metadata {
            labels = {
              app = "nginx"
            }
          }

          spec {
            container {
              image = "nginx:latest"
              name  = "nginx"

              port {
                container_port = 80
              }
            }
          }
        }
      }
    }

Create a Kubernetes Service for the Deployment:

    resource "kubernetes_service" "nginx" {
      metadata {
        name = "nginx"
      }

      spec {
        selector = {
          app = kubernetes_deployment.nginx.metadata[0].labels.app
        }

        type = "NodePort"

        port {
          port        = 80
          target_port = 80
          node_port   = 30080
        }
      }
    }

Copy the configuration above and place it in a target directory such as ~/terraform-demo.

    mkdir ~/terraform-demo && cd ~/terraform-demo

Create a main.tf file, copy the configuration to main.tf and save it. The complete file configuration is as follows:

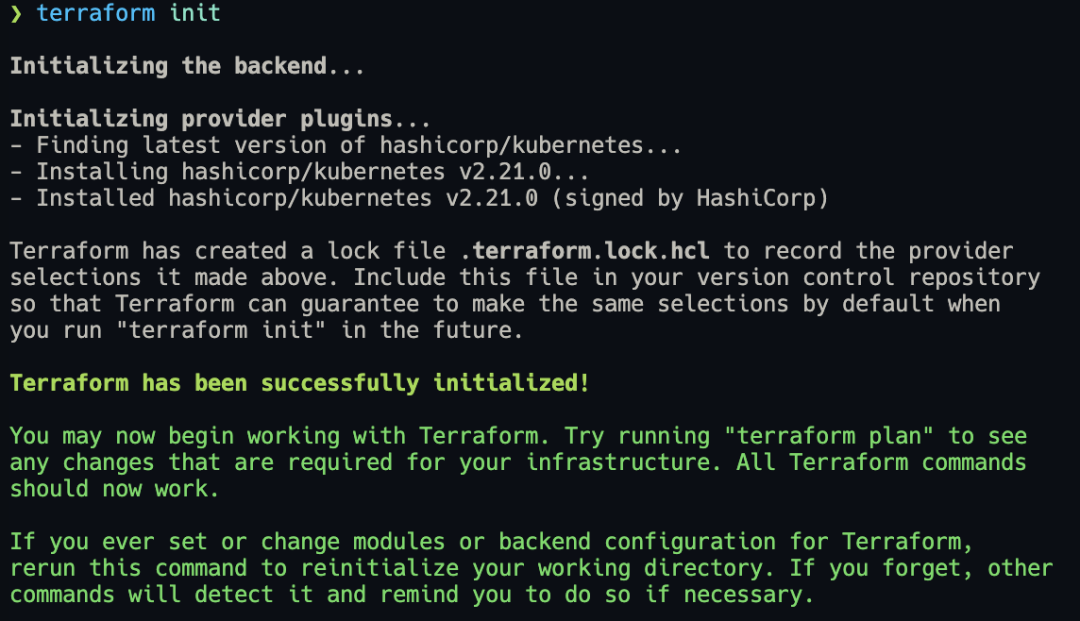

Open the terminal, enter the target directory, and initialize the Terraform environment.

    cd ~/terraform-demo && terraform init

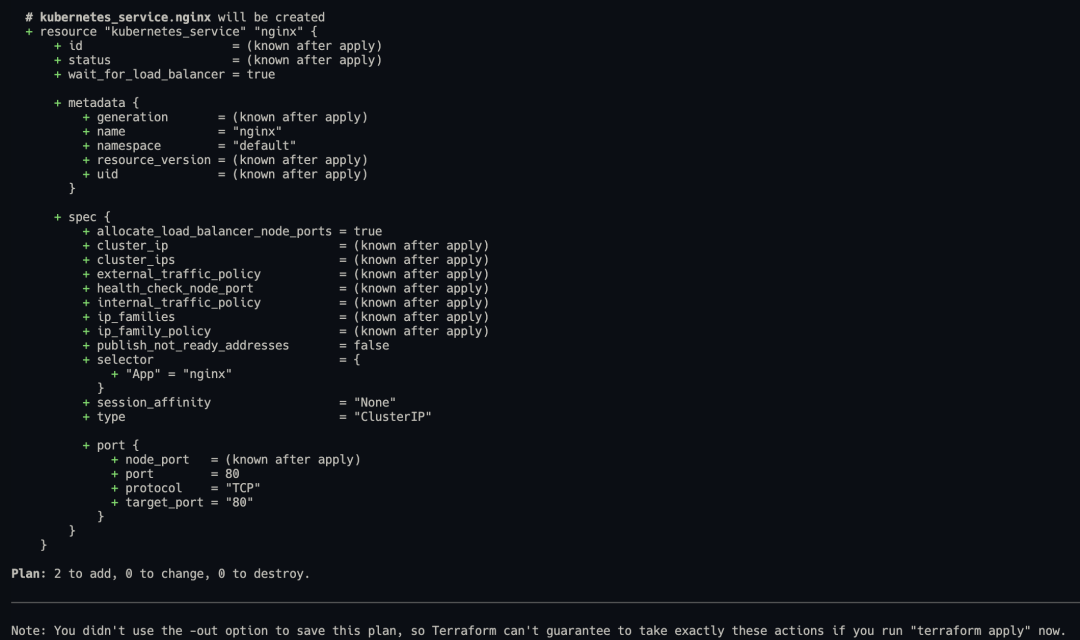

Run terraform plan to preview resource changes.

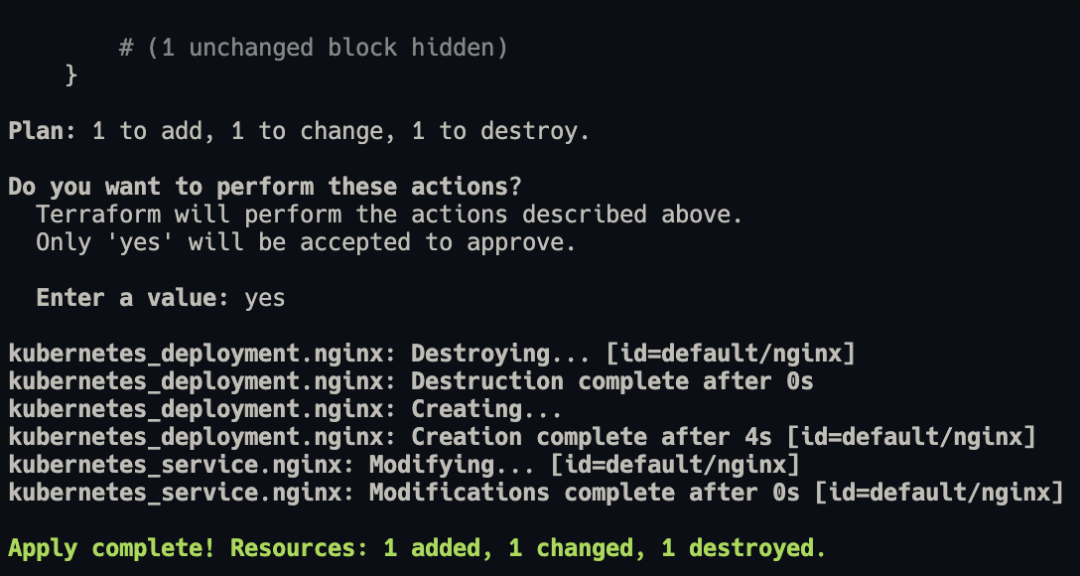

Run terraform apply to create resources.

Finally, you can access the nginx welcome page by opening your web browser and navigating to http://localhost:30080.
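If you would rather not look up the port by hand when checking the result, an output block can surface it after terraform apply. This is a small sketch meant to be appended to the main.tf from this example; the output name is an arbitrary choice.

```hcl
# Prints the NodePort of the nginx Service once the apply has finished.
output "nginx_node_port" {
  description = "NodePort on which the nginx Service is exposed"
  value       = kubernetes_service.nginx.spec[0].port[0].node_port
}
```

The value can be read again later with terraform output nginx_node_port.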

To delete the resources, run terraform destroy.

As the example above shows, managing Kubernetes resources with Terraform is straightforward. Users describe the resource configuration in HCL and then create the resources by running terraform apply. Terraform also supports importing existing resources with terraform import, so that resources created outside Terraform can be brought under its management.
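Besides the terraform import command, Terraform 1.5 and later also accept a declarative import block. The sketch below assumes that a Deployment named nginx already exists in the default namespace and that a matching kubernetes_deployment.nginx resource block is present in the configuration.

```hcl
# Requires Terraform 1.5 or later; older versions only offer the CLI command.
import {
  to = kubernetes_deployment.nginx
  id = "default/nginx" # <namespace>/<name> of the existing Deployment
}
```

With this block in place, the next terraform plan shows the import as part of the planned changes, and terraform apply records the resource in the state.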

Terraform State Management

Terraform manages the state of resources and determines whether they have been created, modified, or need to be deleted through its state management mechanism.

Every time Terraform performs an infrastructure change operation, it records the state information in a state file. By default, this state file is named terraform.tfstate and is saved in the current working directory. Terraform relies on the state file to decide how to apply changes to resources.

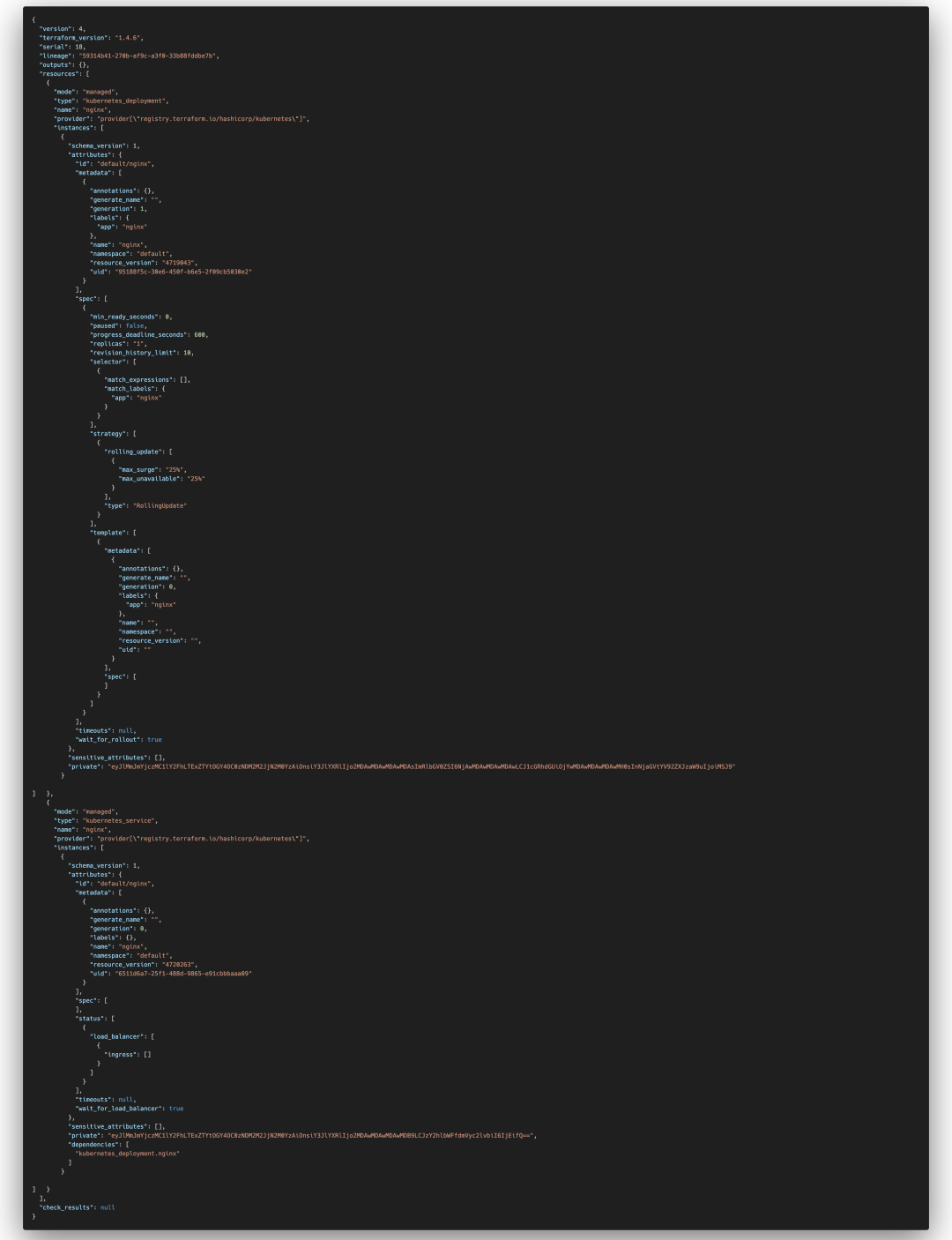

In the example above, you can see that the generated state file contains the resources that were created, such as kubernetes_deployment and kubernetes_service. These correspond to the resources defined in our HCL files. Both the resources we create and the data sources we read are recorded in this file.
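For readers who have not used data sources yet, here is a minimal sketch of one. It reads the existing default namespace without managing it, and its result is still recorded in the state file. The output name is a hypothetical choice.

```hcl
# A data source only reads an object; Terraform does not create or delete it.
data "kubernetes_namespace" "default" {
  metadata {
    name = "default"
  }
}

output "default_namespace_uid" {
  value = data.kubernetes_namespace.default.metadata[0].uid
}
```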

The state file also includes metadata information, such as resource IDs and attribute values. This metadata is crucial for Terraform to manage resources effectively.

Terraform's choice of managing resources through state files rather than using API calls to inspect resource status directly from cloud providers is based on several advantages:

Efficiency and Performance: Terraform can directly access the state file to retrieve the current state of created cloud resources. For small-scale infrastructures, Terraform can query and synchronize the latest attributes of all resources, comparing them to the configuration in each apply operation. If they match, no action is needed; if they don't, Terraform creates, updates, or deletes resources accordingly. This approach reduces the need for time-consuming API calls, especially for large infrastructures where querying each resource individually can be slow. Many cloud providers also have API rate limits, which Terraform can encounter if it attempts to query a large number of resources in a short time. Managing state through a state file significantly improves performance.

Resource Dependency Management: Terraform records resource dependencies within the state file. This allows Terraform to manage the order in which resources are created or destroyed based on their dependencies. It ensures that resources are provisioned in the correct sequence.

Collaboration: Remote backends can manage state files, facilitating collaboration among team members. By storing the state remotely (e.g., in AWS S3, GCS, HTTP), multiple team members can work together on the same infrastructure project and share the state file.
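The dependency tracking described above can be illustrated with a small sketch. The resource names are hypothetical; the point is that referencing one resource from another is enough for Terraform to order them correctly.

```hcl
resource "kubernetes_namespace" "demo" {
  metadata {
    name = "demo" # hypothetical namespace name
  }
}

resource "kubernetes_config_map" "settings" {
  metadata {
    name = "settings"
    # This reference creates an implicit dependency on the namespace,
    # so the namespace is created first and destroyed last.
    namespace = kubernetes_namespace.demo.metadata[0].name
  }

  data = {
    greeting = "hello"
  }
}
```

When no such reference exists but ordering still matters, the depends_on meta-argument can declare the dependency explicitly.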

While managing resources through state files offers many benefits, it has some potential drawbacks:

State File Security: The state file contains sensitive information in plain text. If this file is leaked or compromised, it could expose critical information, including passwords and access keys, posing a significant security risk. Care must be taken to protect this file from unauthorized access.

State File Reliability: If the state file becomes corrupted or is lost due to factors like hardware failure, it can result in resource information being lost. This can lead to resource leaks and difficulties in resource management.

To address these concerns, Terraform provides a solution through remote backends, where the state file is stored in a remote, secure location. This approach mitigates the risk of state file exposure and ensures better collaboration among team members.
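A related precaution on the configuration side is to mark secret inputs as sensitive. The sketch below uses a hypothetical variable name.

```hcl
variable "db_password" {
  description = "Database password (hypothetical example variable)"
  type        = string
  sensitive   = true # redacted in plan and apply output
}
```

Note that this flag only hides the value in CLI output. If the value ends up in a resource attribute, it is still written to the state file, which is why protecting the backend remains necessary.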

Challenges in Using Terraform

The example provided above demonstrates that Terraform makes managing Kubernetes resources relatively simple. However, as previously mentioned, Terraform does come with some challenges that can impact the user experience in resource management. These challenges include:

HCL Language: Using HCL (HashiCorp Configuration Language) to describe resource configurations means that developers need to learn a new language. Additionally, if you want to use Terraform to manage resources in other platforms such as AWS, GCP, etc., you'll need to learn the configurations for those providers, increasing the learning curve. Syntax issues and complexity can also add to the difficulty.

State Management: Terraform manages resources through state files, and the information stored in these state files is in plain text. If the state file is leaked or compromised, it poses a significant risk to resource management and security.

Knowledge and Experience: Infrastructure resource managers need to have knowledge and experience with the resources they are configuring. Otherwise, misconfigurations may lead to resource creation failures or configurations that do not meet expectations.

Configuration Overhead: Managing a large number of resources often requires writing a significant amount of HCL files. Resource users may spend considerable time searching for resources and configuration files, increasing the management overhead.

Real-time Resource Status: Terraform does not provide real-time access to running resources. Tasks such as checking the live state of Kubernetes resources, viewing logs, and executing commands in a terminal have to be handled with other tools.

Despite these challenges, Terraform remains a powerful tool for infrastructure management. Mitigating these issues often involves careful planning, adhering to best practices, and leveraging additional tools and processes to complement Terraform's capabilities.
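One common practice for keeping configuration overhead down is to generate similar resources from a single block with for_each. The following is a minimal sketch with hypothetical namespace names.

```hcl
variable "namespaces" {
  description = "Namespaces to create (hypothetical names)"
  type        = set(string)
  default     = ["dev", "staging", "prod"]
}

# One block yields one namespace per element of the set.
resource "kubernetes_namespace" "env" {
  for_each = var.namespaces

  metadata {
    name = each.key
  }
}
```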

Using Walrus to Simplify Infrastructure Management

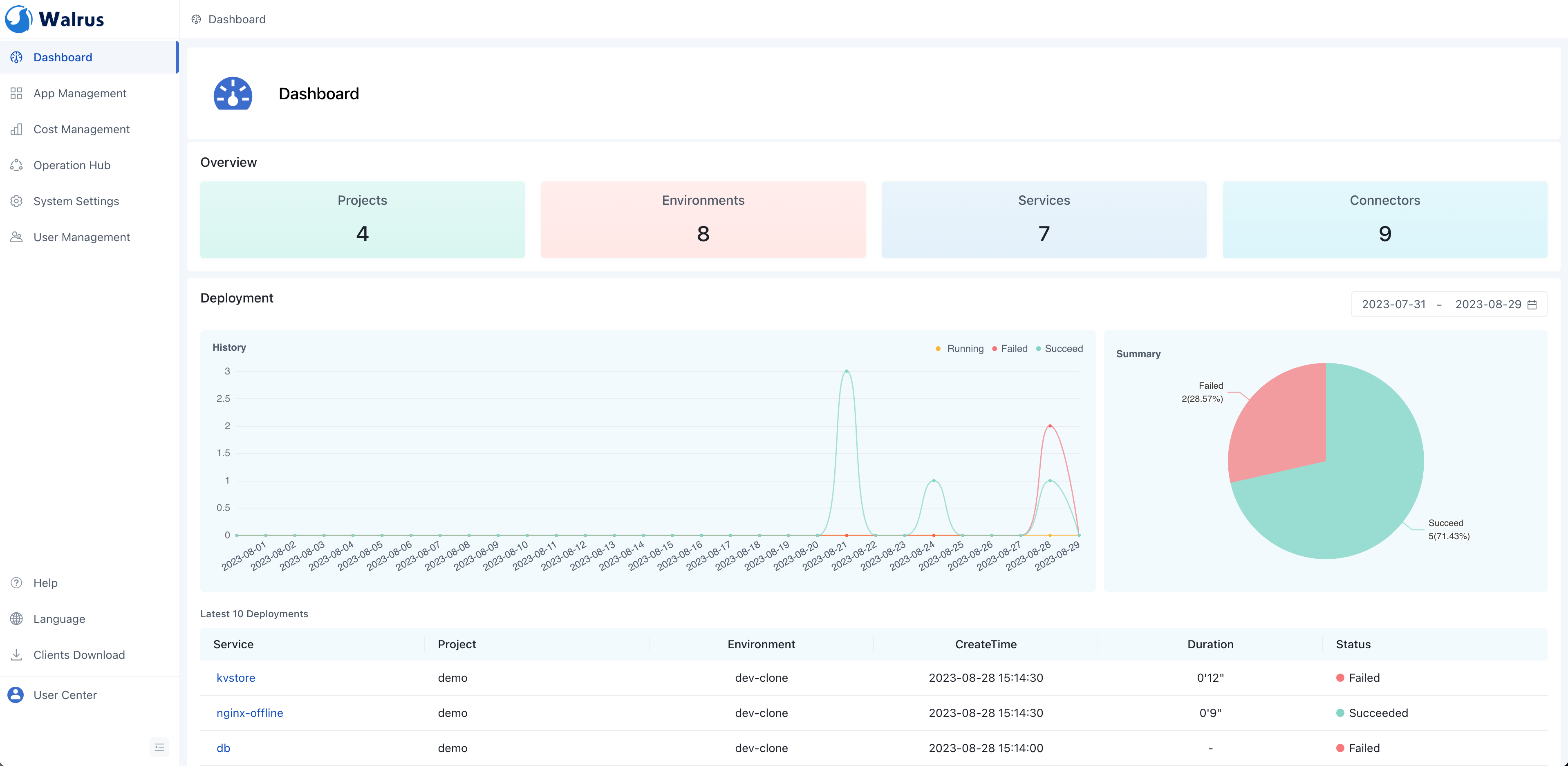

Walrus is an application deployment and management platform based on the concept of platform engineering, built on Terraform technology at its core. It enables developers and operations teams to rapidly set up production or test environments and efficiently manage them. Walrus leverages the capabilities of platform engineering to address the previously mentioned challenges.

Walrus abstracts resources into services and uses applications to control these services, separating the underlying resource configurations from their actual usage. This simplifies infrastructure management.

By managing multiple environments and configurations, it ensures consistency across development, testing, and production environments, reducing the risk of errors and inconsistencies, and ensuring that applications run accurately at all times.

With governance and control features provided by the platform, developers can also ensure that the environments they use are secure and compliant with best practices and security standards. Resource users can focus on using resources without concerning themselves with the underlying details and configurations.

Through the defined resource templates, developers no longer need to worry about the syntax of HCL language, how to configure Terraform provider parameters, or the underlying implementation details of the infrastructure. They can simply use the resources through the platform's UI by filling in the parameters of the predefined modules. This significantly reduces the complexity of resource usage for developers and improves overall development efficiency.
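To give a sense of what such template parameters look like underneath, here is a sketch of input variables that a Terraform module might expose. It is not taken from Walrus's built-in templates; the variable names and limits are assumptions for illustration.

```hcl
variable "image" {
  description = "Container image to deploy"
  type        = string
}

variable "replicas" {
  description = "Number of replicas to run"
  type        = number
  default     = 1

  # Reject values outside an assumed allowed range before anything is deployed.
  validation {
    condition     = var.replicas >= 1 && var.replicas <= 10
    error_message = "The replicas value must be between 1 and 10."
  }
}
```

A platform UI can render fields like these as a form, so the person filling it in never has to touch the HCL itself.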

Additionally, the inconveniences of Terraform's state management have been addressed. Walrus stores state files remotely in an HTTP backend, mitigating the risk of state file exposure. Different services automatically manage their respective states, allowing team members to use the backend to solve state storage and sharing issues.
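For reference, this is roughly what an HTTP backend looks like in plain Terraform. The URLs are hypothetical placeholders and do not represent Walrus's actual endpoints, which Walrus manages on the user's behalf.

```hcl
terraform {
  backend "http" {
    address        = "https://state.example.com/states/demo"      # hypothetical URL
    lock_address   = "https://state.example.com/states/demo/lock" # hypothetical URL
    unlock_address = "https://state.example.com/states/demo/lock" # hypothetical URL
    lock_method    = "POST"
    unlock_method  = "DELETE"
  }
}
```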

Walrus defines a series of abstractions at the upper level of resources to simplify the management of application services. It manages the lifecycle of business services through concepts like Project, Environment, Connector, Service, and Resource.

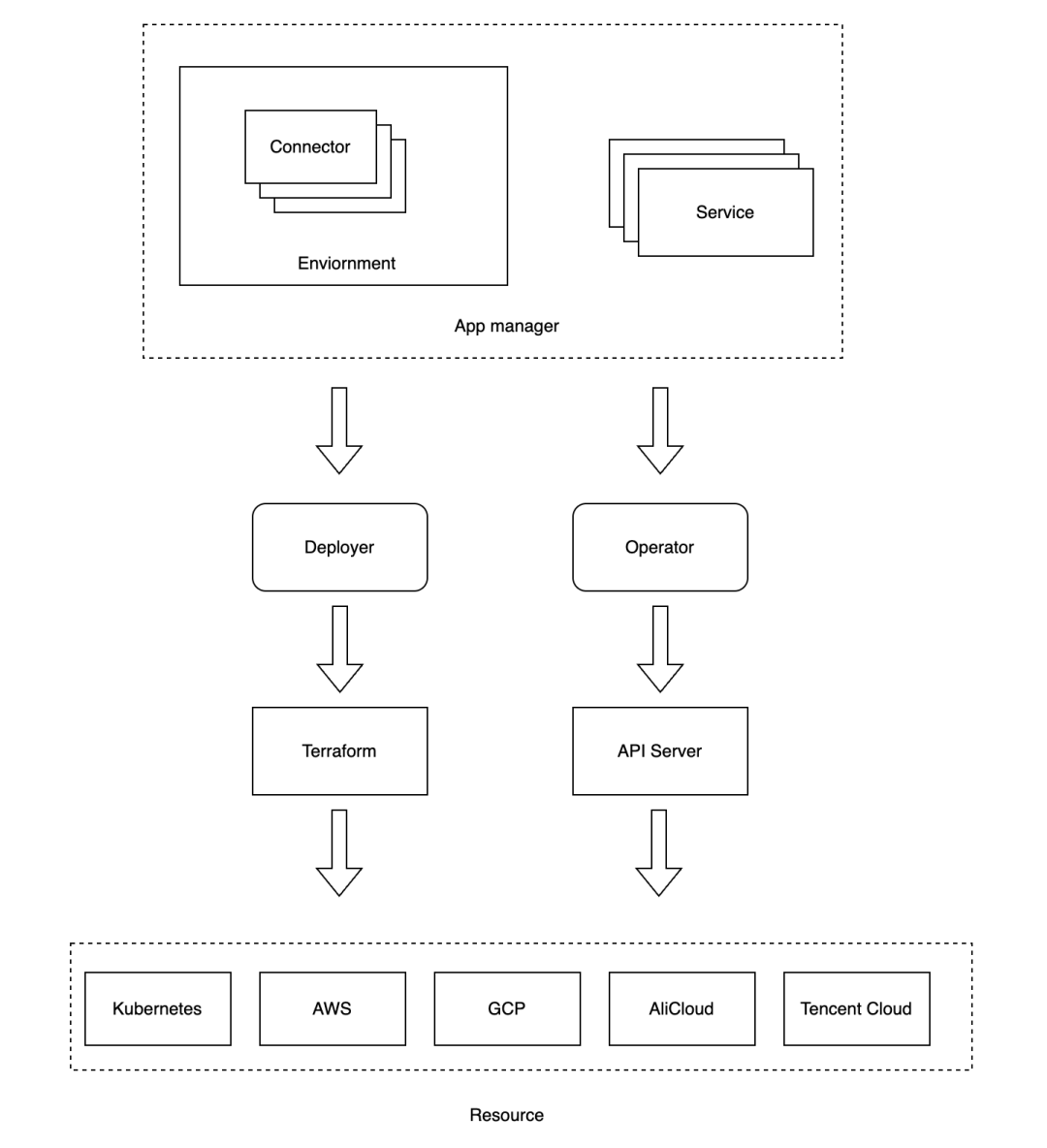

Internally, it primarily uses three major components: App Manager, Deployer, and Operator to manage resources. App Manager is responsible for resource management, Deployer handles resource deployment, and Operator manages resource states. Before managing and deploying application resources, connectors need to be created first. Connectors are used to connect to resource providers such as Kubernetes, AWS, Alibaba Cloud, and custom connectors can also be defined to manage different cloud resources.

App Manager manages the lifecycle of services, including creating, updating, and deleting services, as well as controlling the versions of application instances, including creating, updating, and deleting application instance versions.

Deployer can automatically generate resource configuration files based on the application's configuration and deploy resources using Terraform. Deployer identifies the Provider defined in templates and generates resource configuration files based on the Connector configuration in the environment. Deployer enables resource deployment, creation, updates, deletion, rollback, and more.

Operator manages resource states, including viewing resource states, logs, and executing terminal commands. Operator defines a series of resource operations using different resource types and the corresponding service provider's API server to perform resource operations. For example, for Kubernetes resource operations, Operator uses the Kubernetes API Server to view resource states, logs, execute terminal commands, and more. Currently, Walrus supports operations for Kubernetes, AWS, and Alibaba Cloud resources, with support for more cloud providers' resource operations planned for the future.

How to deploy with Walrus

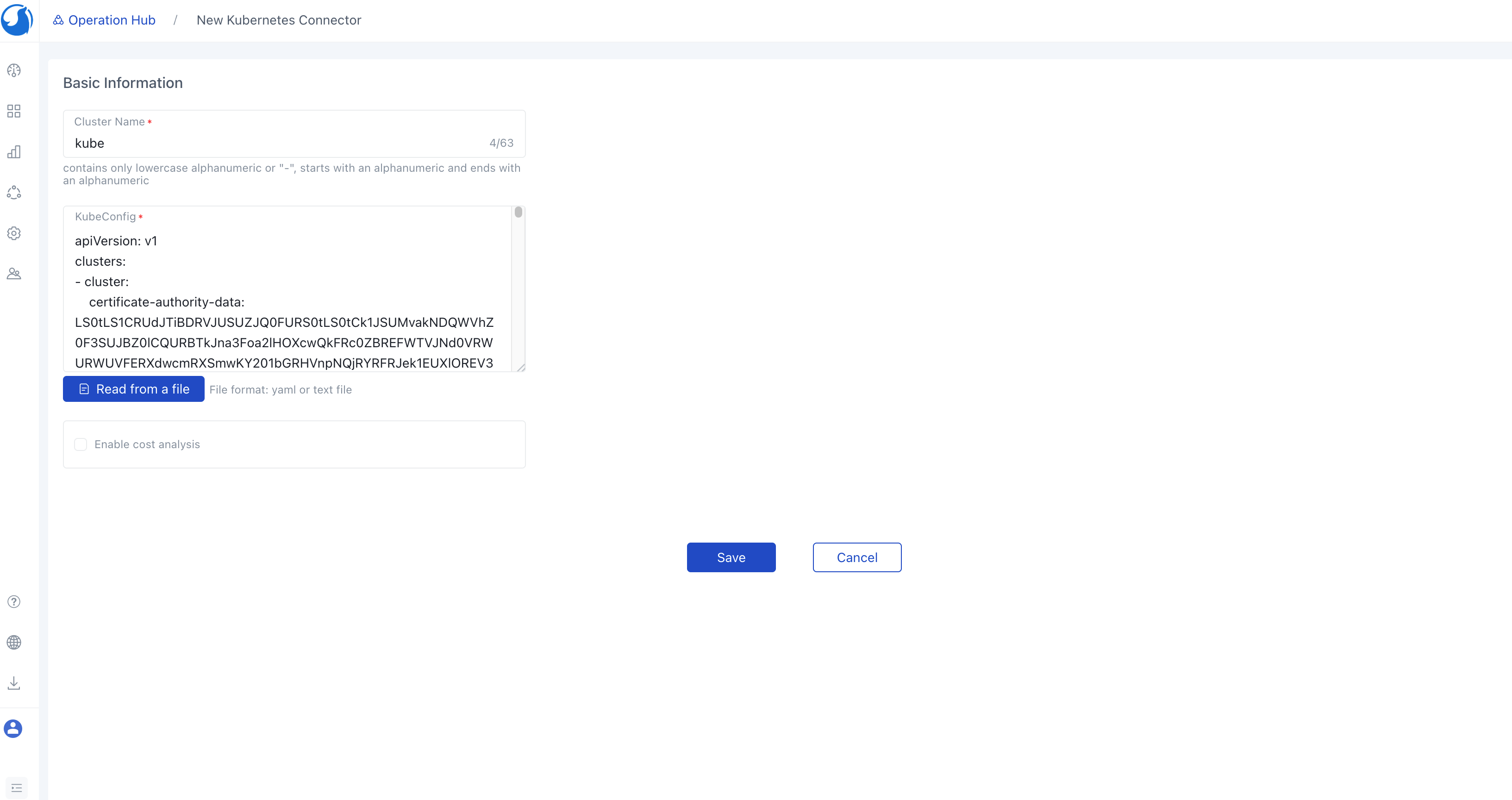

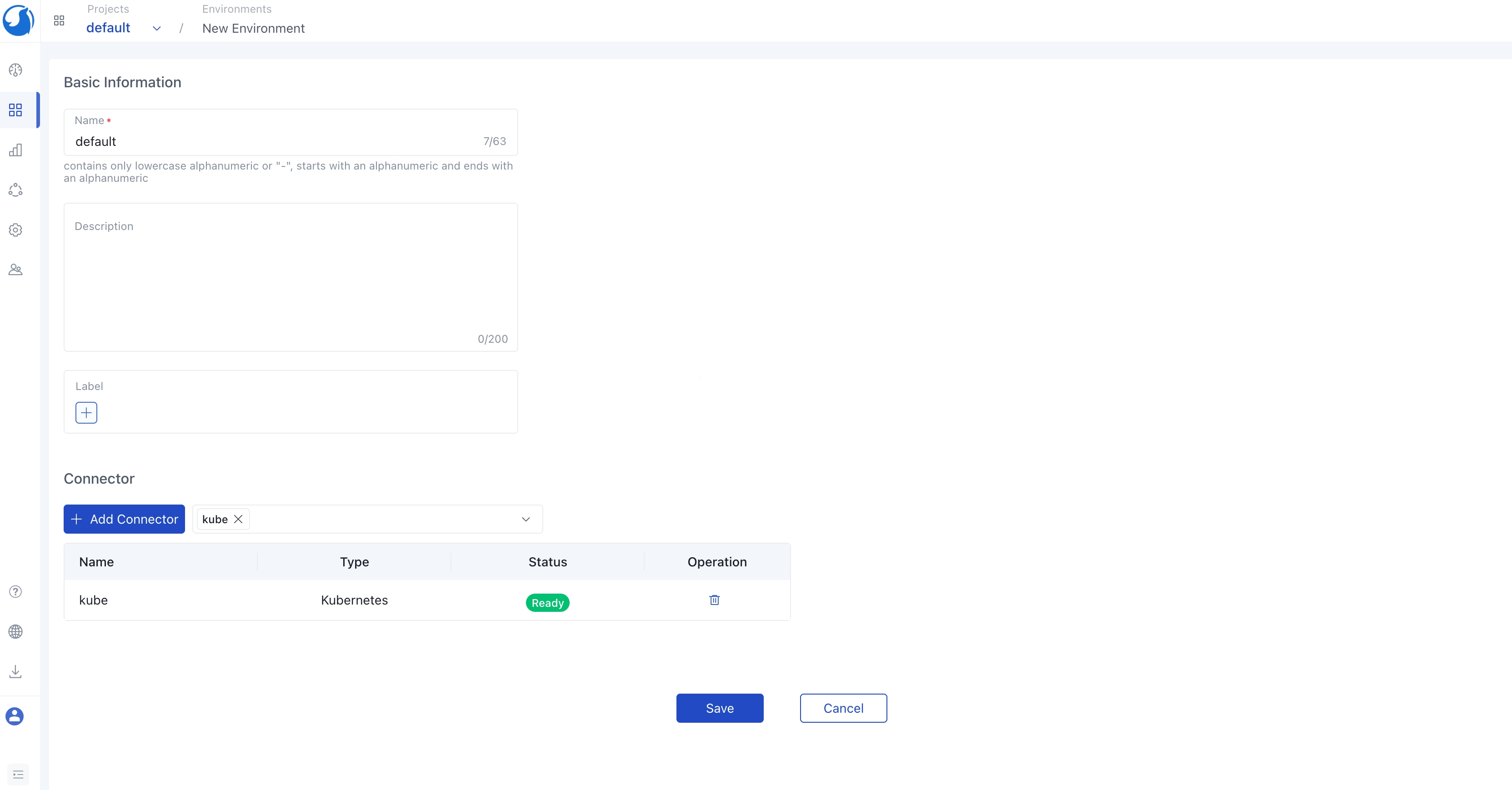

Before creating a service, you need to create a connector. You can create a Kubernetes connector and assign this connector to the default environment.

In this case, select the default project, create a default environment in it, and then create Kubernetes-related services in that environment. You can also create other types of connectors, such as AWS or Alibaba Cloud, depending on the cloud services you use. Walrus also supports multiple environments. You can create different environments as needed, such as dev and prod, and manage different resources in each of them.

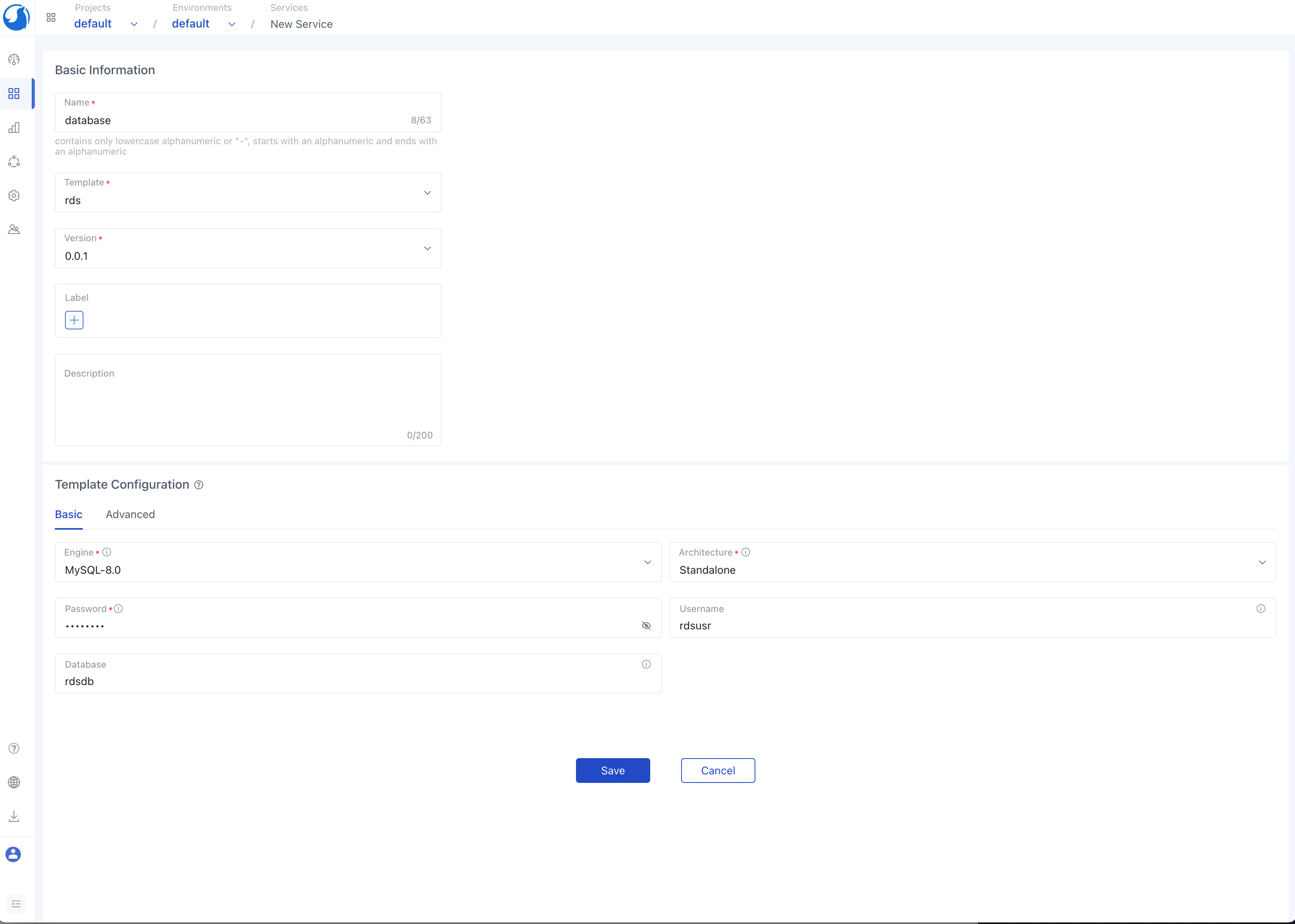

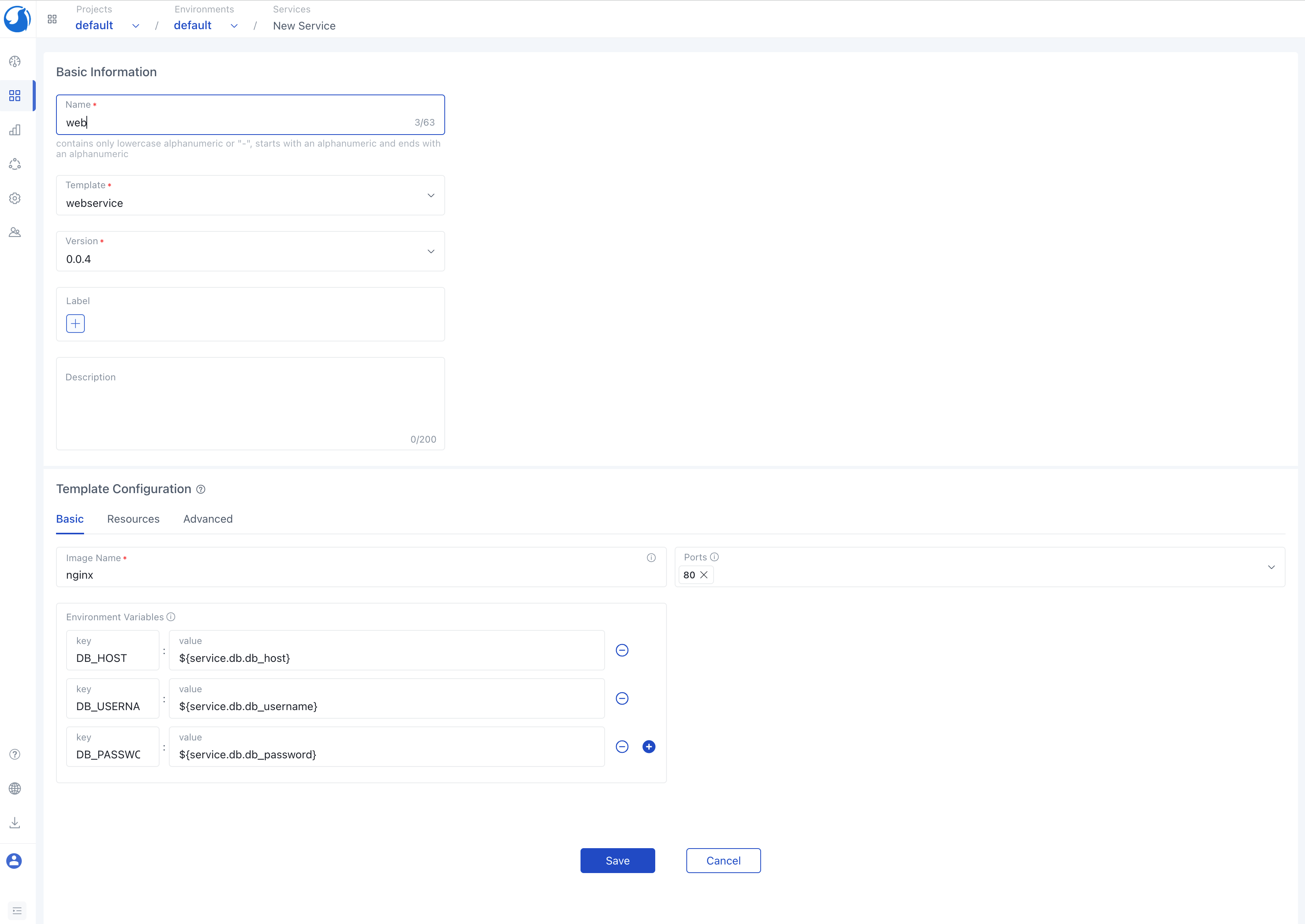

After completing the environment configuration, the next step is to create services. You have the option to choose a module for your service from the system's pre-built templates, or you can customize your own template to tailor the service to your specific needs within the module management section. When creating a service, you'll need to select the module, specify the service version, and configure the template parameters to suit your service.

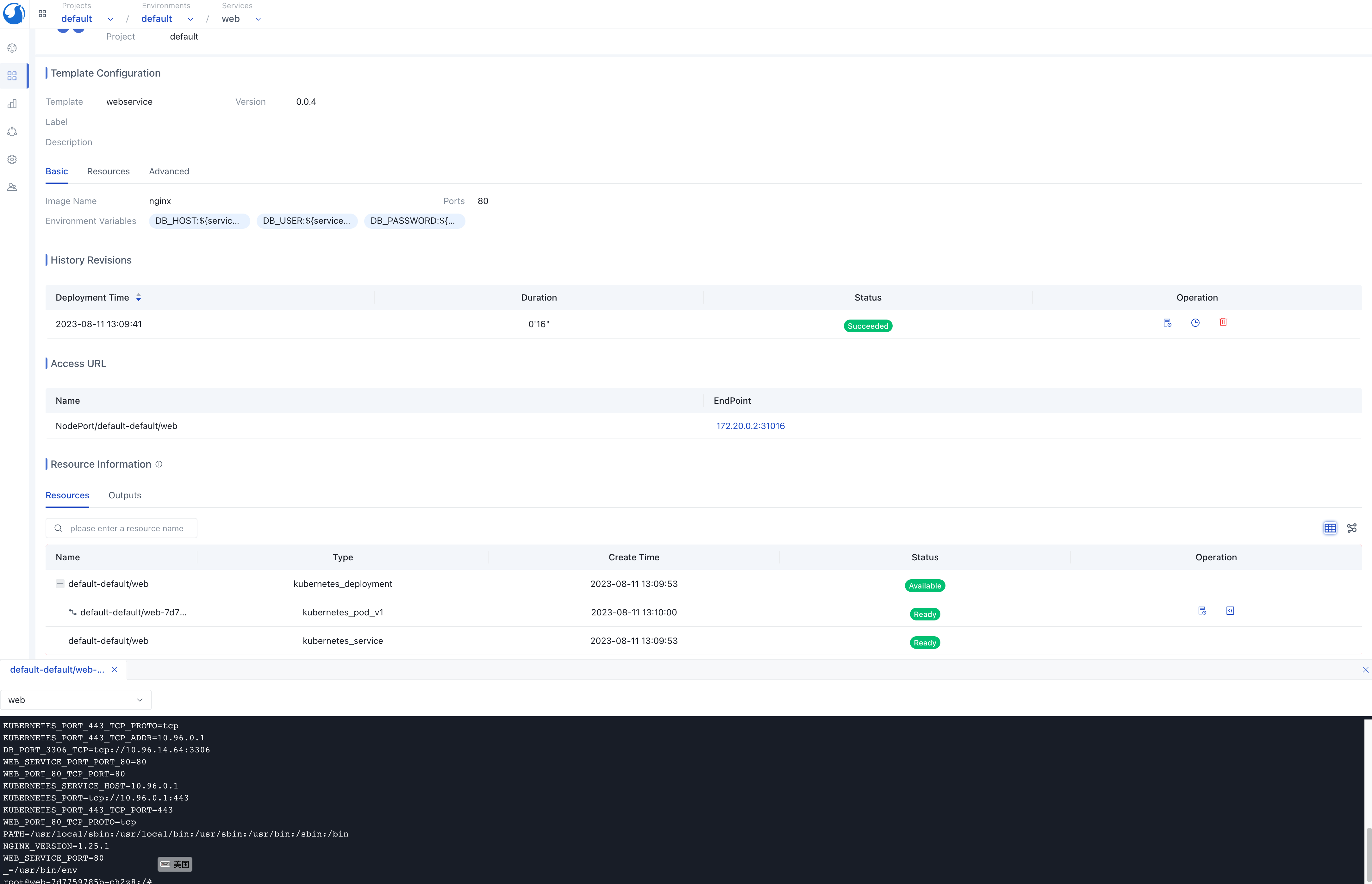

Below is a screenshot illustrating the creation of a web application using the pre-existing webservice and mysql modules.

To create a database service in the default environment, simply input the module configuration details and save your settings.

Once the database service creation is complete, proceed to create a web service within the default environment. During this process, you have the opportunity to inject the information of the database service into the environment variable of the web service. This is achieved by configuring environment variables, allowing the program to seamlessly access the database service through these environment variables.
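In Walrus this injection is configured through the UI. As a rough sketch of what it amounts to at the Terraform level, the configuration below passes a database address into a container as an environment variable. All names, the image, and the default address are hypothetical.

```hcl
variable "db_host" {
  description = "Address of the database service (hypothetical default)"
  type        = string
  default     = "mysql.default.svc.cluster.local"
}

resource "kubernetes_deployment" "web" {
  metadata {
    name = "web"
  }

  spec {
    replicas = 1

    selector {
      match_labels = {
        app = "web"
      }
    }

    template {
      metadata {
        labels = {
          app = "web"
        }
      }

      spec {
        container {
          name  = "web"
          image = "nginx:latest" # placeholder image

          # The application reads the database address from this variable.
          env {
            name  = "DB_HOST"
            value = var.db_host
          }
        }
      }
    }
  }
}
```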

Once the instance is created, Deployer automatically deploys its resources based on the application configuration. After the deployment completes, Operator automatically synchronizes the status of the resources, and operations such as viewing logs and opening a terminal are available on the instance details page.

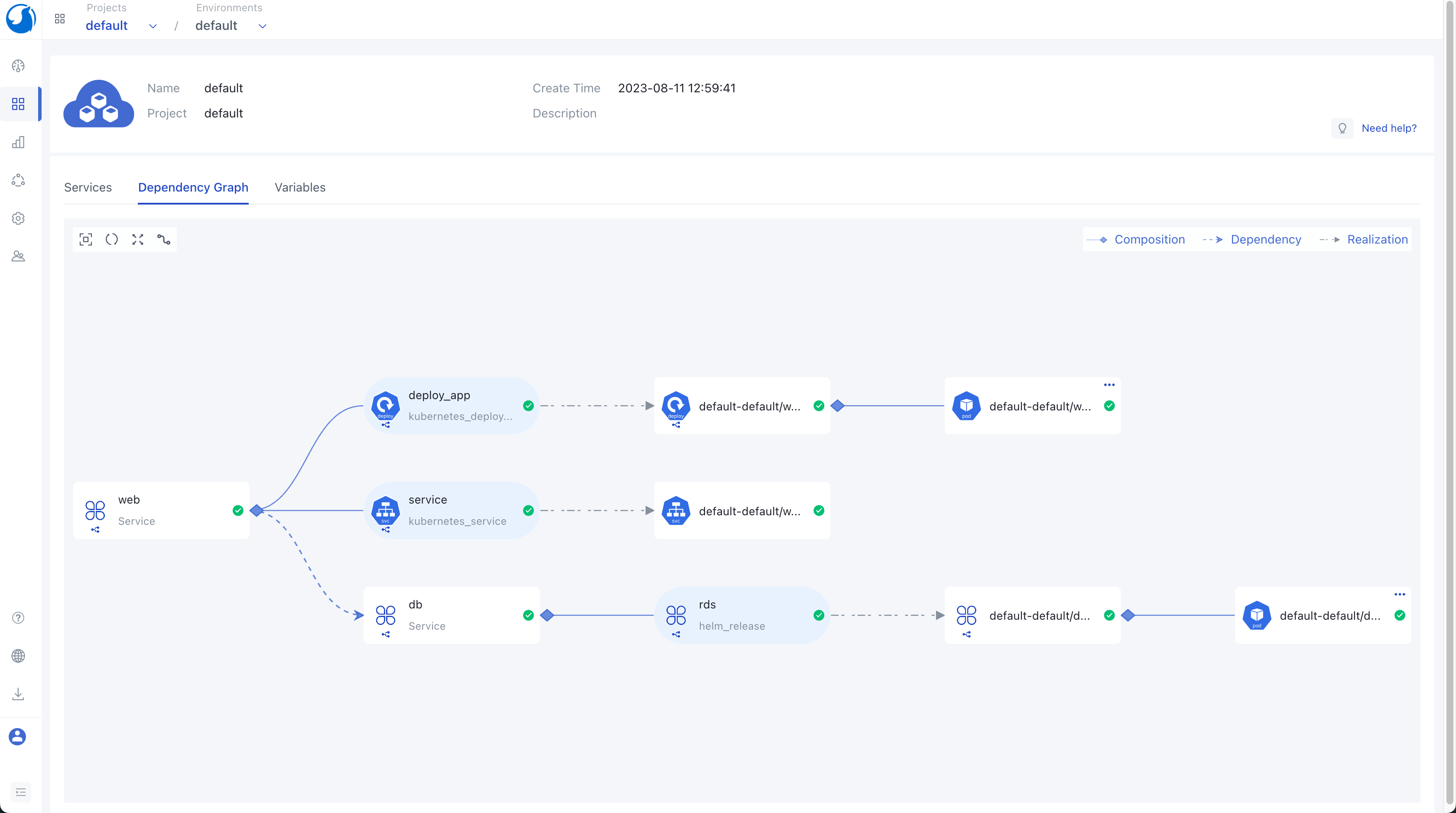

Resources within an environment can be viewed through the service list page, and Walrus provides service-level and environment-level dependency diagrams. These top-level environment views help developers better understand the business architecture. In the dependency diagram, you can clearly see the dependencies between different services and the resources they deploy. You can also access logs and terminals on the dependency diagram, making it easier for developers to view service logs and execute business-related commands, ultimately improving development efficiency.

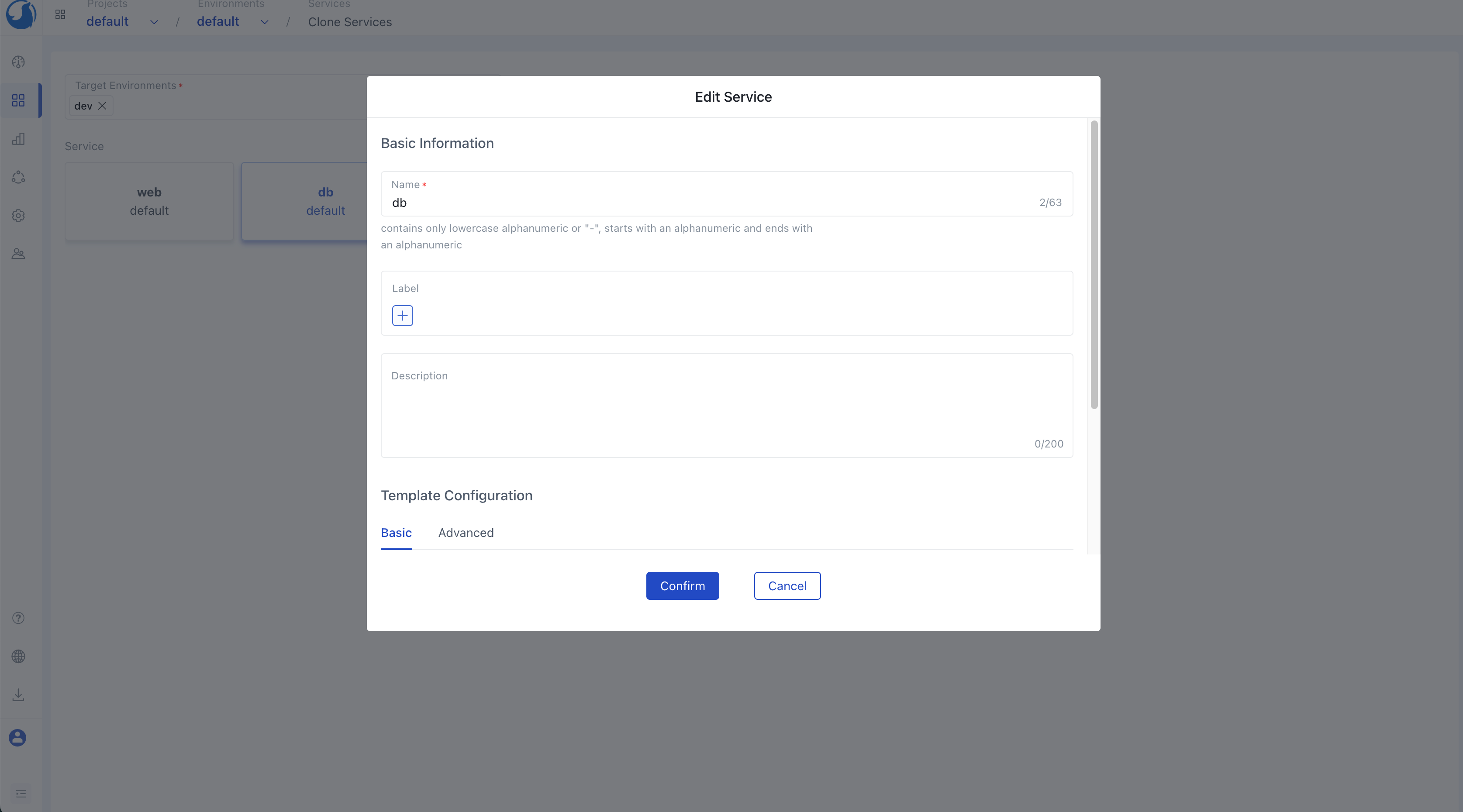

Walrus also supports rapid service creation, including bulk cloning of services into different environments. Building on the previous example, we can create a new dev environment and quickly replicate both the database and web services into it, together with the dependency relationships between them. Bulk cloning gives us a streamlined way to set up development or testing environments for other developers or testers on the team.

Walrus simplifies resource management and system deployment, making it accessible even to developers lacking DevOps experience or quality assurance engineers. With Walrus, resource management and deployment become straightforward tasks.

Moreover, Walrus extends its support to a variety of resource types, including AWS, Google Cloud, and more. You can control the utilization of these resources by defining the appropriate connectors. Simply configure and create the connector within the DevOps center, and you'll have access to corresponding modules like built-in aws-rds and alicloud-rds. When creating a service, just select the relevant module to effortlessly deploy and manage the associated cloud resources.

Walrus also empowers platform engineers with AI capabilities, enabling them to swiftly craft infrastructure modules.

Benefiting from Terraform's robust ecosystem, Walrus boasts the flexibility to accommodate diverse resource types. Platform engineers can define distinct modules within the system, ensuring a consistent resource management and deployment experience across different systems.

GitHub
