GPUStack is an open-source GPU cluster manager for running large language models (LLMs). It enables you to create a unified cluster from GPUs across various platforms, including Apple MacBooks, Windows PCs, and Linux servers. Administrators can deploy LLMs from popular repositories like Hugging Face, allowing developers to access these models as easily as they would access public LLM services from providers such as OpenAI or Microsoft Azure.

GPUStack continues to evolve quickly in response to user needs. Since its launch at the end of July, it has been well received by the community and has generated substantial feedback. On September 14th, we released GPUStack 0.2, which introduced distributed inference, CPU inference, and flexible scheduling strategies. Just two weeks later, we’re excited to announce GPUStack 0.3.

This version introduces several major features: vLLM support, custom backend parameters, support for multimodal vision language models (VLMs), and a multi-model comparison view in the Playground. OS support has also been expanded, and several community-reported issues have been fixed to better accommodate diverse use cases.

For more information about GPUStack, visit:

GitHub repo: https://github.com/gpustack/gpustack

User guide: https://docs.gpustack.ai

Key features

vLLM support

The first backend supported by GPUStack is llama.cpp, a flexible and multi-platform backend compatible with Linux, Windows, and macOS. It supports various GPU and CPU environments. The flexibility and compatibility of llama.cpp make it an ideal choice for resource-constrained scenarios, serving as a leading solution for LLM inference in AI PCs, edge devices, consumer-grade hardware, and other resource-limited environments.

In contrast, vLLM is a backend tailored for production environments and optimized for inference in large-scale deployments. With its high throughput and efficient memory management, vLLM typically outperforms llama.cpp in scenarios that require higher concurrency. Although vLLM runs only on Linux, it is widely adopted in data centers for its production-grade inference capabilities.

To better support production scenarios, GPUStack 0.3 adds support for the vLLM backend, which also brings support for non-GGUF model formats. GPUStack now selects the backend automatically based on the model format at deployment time: GGUF models run on llama.cpp, and non-GGUF models run on vLLM.
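The selection rule described above can be sketched in a few lines of Python. This is only an illustration of the rule, not GPUStack's actual implementation: the function name `select_backend` and the backend name strings are assumptions made for this example, and the check relies on the file extension alone.

```python
from typing import Optional

# Hypothetical backend labels used only for this illustration.
LLAMA_CPP = "llama.cpp"
VLLM = "vllm"


def select_backend(model_file: Optional[str]) -> str:
    """Pick a backend from the model format.

    A model file ending in ".gguf" is routed to llama.cpp. Anything else,
    including a model with no single weight file (for example a repository
    of safetensors shards), is routed to vLLM.
    """
    if model_file is not None and model_file.lower().endswith(".gguf"):
        return LLAMA_CPP
    return VLLM
```

For example, `select_backend("qwen2.5-7b-instruct-q4_0.gguf")` selects llama.cpp, while `select_backend("model.safetensors")` selects vLLM.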

Adding vLLM support allows GPUStack to cover a broader range of use cases, from research and testing environments to production deployments, spanning data centers, cloud environments, desktops and edge devices.

Custom backend tuning

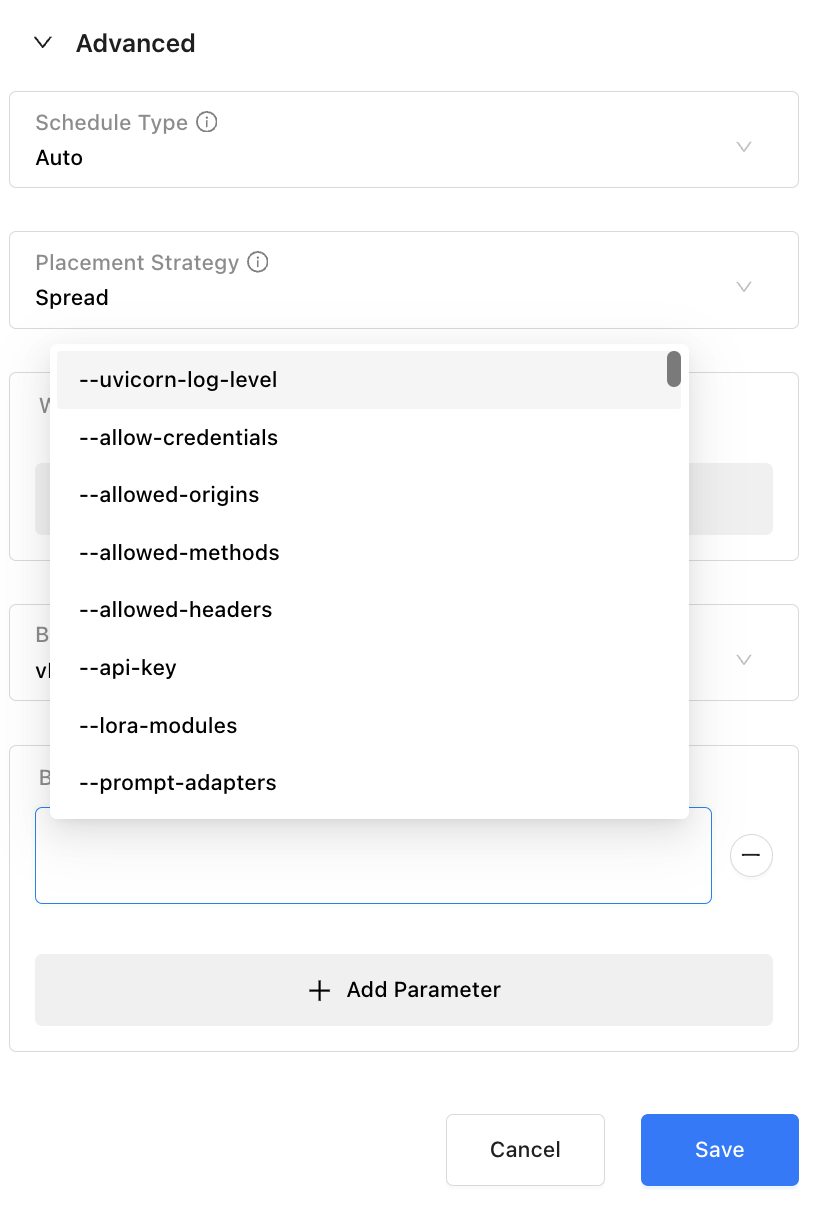

Different application scenarios place different demands on model throughput, context size, and memory usage. When deploying a model, administrators often need to tune backend parameters to match the recommended configuration for that scenario. GPUStack 0.3 supports custom backend parameters for both llama.cpp and vLLM.

When deploying a model, administrators can set backend parameters directly. GPUStack automatically lists the available parameters for administrators to select and configure.
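To make the idea of backend parameters concrete, the sketch below groups a few commonly tuned options per backend and turns them into command-line arguments. The flags shown come from the backends' own command-line interfaces (`--ctx-size` and `--parallel` for the llama.cpp server, `--max-model-len` and `--gpu-memory-utilization` for vLLM). The values are placeholders rather than recommendations, and the helper `to_cli_args` is hypothetical; it does not describe how GPUStack stores or passes these parameters.

```python
from typing import Dict, List, Union

# Placeholder values only; tune them for your own hardware and models.
EXAMPLE_BACKEND_PARAMETERS: Dict[str, Dict[str, Union[int, float]]] = {
    "llama.cpp": {"--ctx-size": 8192, "--parallel": 4},
    "vllm": {"--max-model-len": 8192, "--gpu-memory-utilization": 0.9},
}


def to_cli_args(parameters: Dict[str, Union[int, float]]) -> List[str]:
    """Flatten {flag: value} pairs into a list of command-line tokens."""
    args: List[str] = []
    for flag, value in parameters.items():
        args.extend([flag, str(value)])
    return args
```

Keeping the parameters as data, separate from the code that renders them, makes it easy to maintain one tuned profile per scenario.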

VLM support

VLMs (Vision Language Models) integrate visual and textual information, enabling the simultaneous processing of images and text for tasks such as image recognition. Thanks to the rapid advancements in multimodal technologies, multimodal models have shown immense potential in various fields, such as autonomous driving and medical imaging analysis, and are a key focus for the future of LLMs.

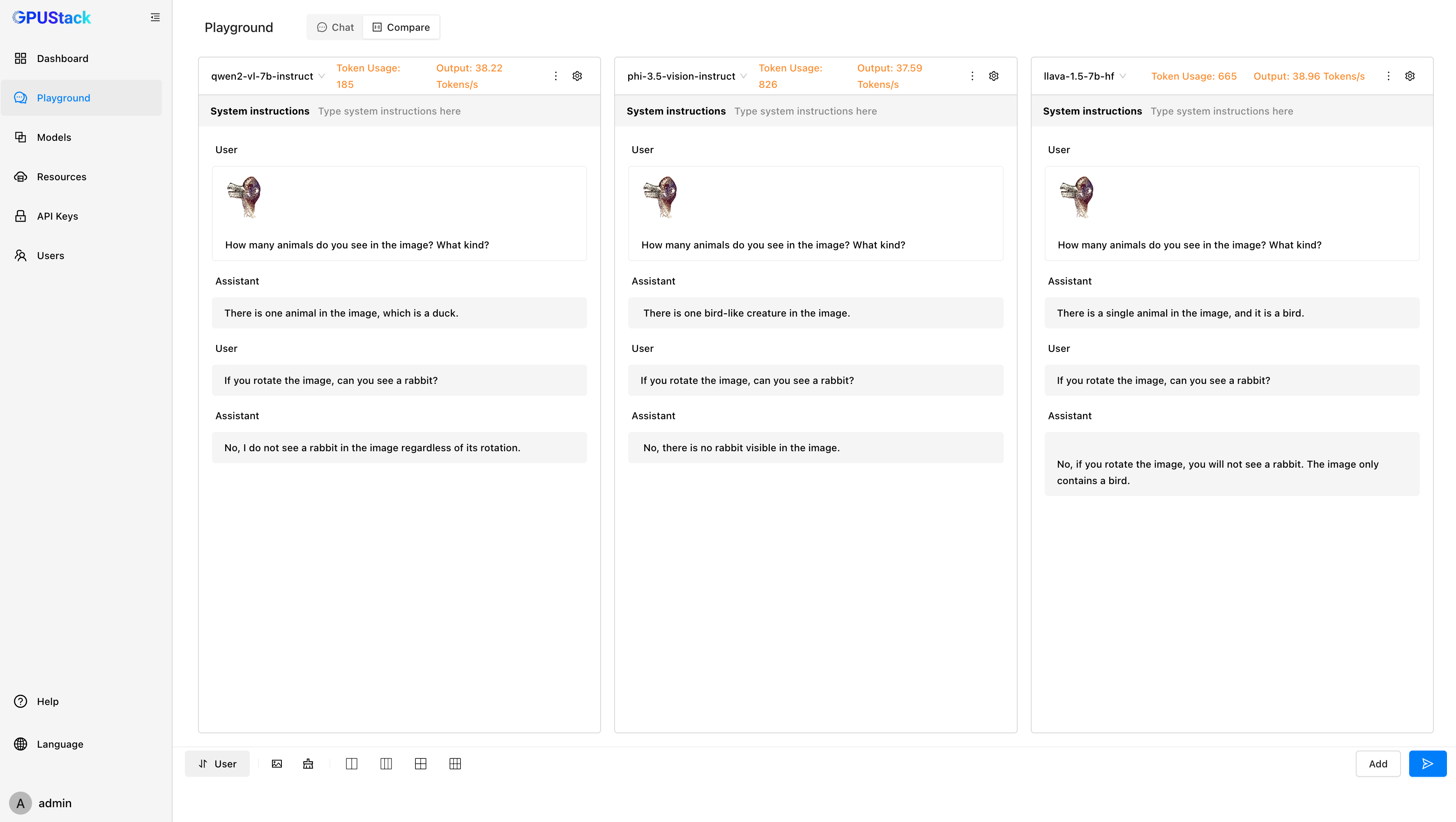

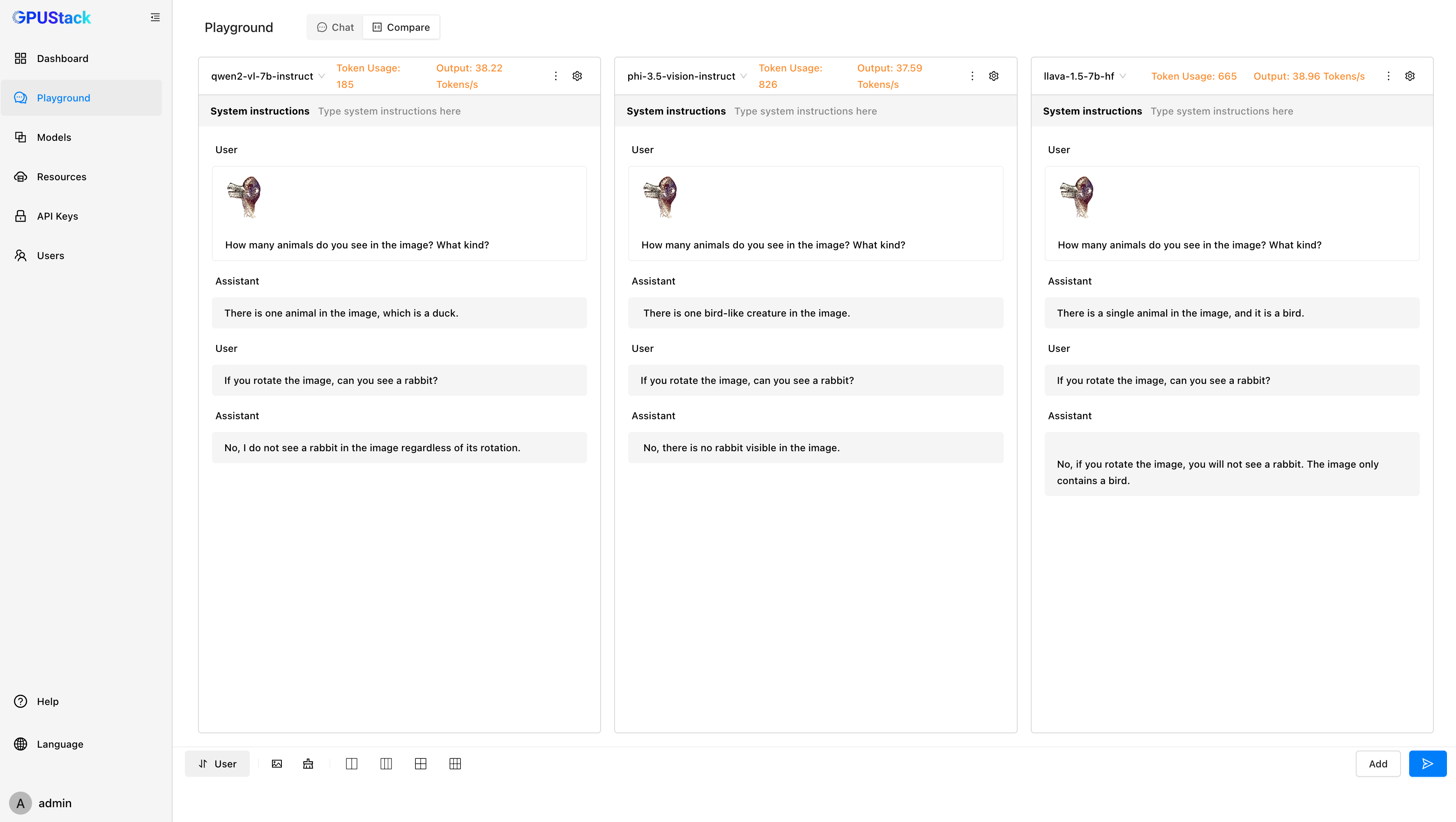

With the release of GPUStack 0.3, we have added support for multimodal VLMs. Administrators can deploy vision language models from the model repository and test them in the Playground. The new multi-model comparison view makes it possible to evaluate several VLMs side by side, and deployed VLMs can be accessed through an OpenAI-compatible API.

Note: Currently, only the vLLM backend supports VLMs.
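Because VLMs are exposed through an OpenAI-compatible API, a request can carry an image alongside the text prompt using the chat completions message format. The sketch below only builds the request body. The function name `build_vision_request` is hypothetical, the model name is whatever you chose at deployment, and the endpoint URL and API key depend on your own installation, so they are not shown.

```python
import base64


def build_vision_request(model: str, prompt: str, image_bytes: bytes,
                         mime_type: str = "image/png") -> dict:
    """Build a chat-completions payload with one text part and one image part."""
    # Embed the image as a base64 data URL so no external hosting is needed.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }
```

The resulting dictionary can be sent as the JSON body of a chat completions request by any HTTP client or OpenAI-compatible SDK.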

Multi-model comparison view

The Playground in GPUStack 0.3 now supports a multi-model comparison view. It compares both response content and performance metrics side by side, giving administrators a more intuitive way to evaluate models. Comparison dimensions include, but are not limited to:

- Compare models from different providers, such as Qwen 2.5 7B and Llama 3.1 8B.

- Compare different model sizes, such as Qwen 2.5 1.5B and 3B.

- Compare different sampling parameters, such as temperature 1.0 vs. temperature 0.6.

- Compare different quantization methods, like Q8_0 vs. Q4_0.

- Compare different GPUs, such as the RTX 4090 vs. the A100.

- Compare different backends, such as llama.cpp vs. vLLM.
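When comparing performance across any of these dimensions, one simple metric is generation throughput in tokens per second. The sketch below ranks a set of runs by that metric. It is an assumption-laden illustration: the `RunResult` fields and the `rank_by_throughput` helper are hypothetical and do not reflect the exact metrics shown in the Playground.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class RunResult:
    label: str              # what is being compared, e.g. "Q8_0" or "Q4_0"
    completion_tokens: int  # number of tokens generated in the response
    elapsed_seconds: float  # wall-clock time spent generating them

    @property
    def tokens_per_second(self) -> float:
        # Treat a non-positive duration as "no measurement" instead of dividing by zero.
        if self.elapsed_seconds <= 0:
            return 0.0
        return self.completion_tokens / self.elapsed_seconds


def rank_by_throughput(results: Iterable[RunResult]) -> List[RunResult]:
    """Return the runs sorted from highest to lowest tokens per second."""
    return sorted(results, key=lambda r: r.tokens_per_second, reverse=True)
```

Throughput alone does not capture response quality, so a ranking like this is best read next to the response content itself.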

ModelScope support

GPUStack already supports models from Hugging Face and the Ollama Registry. With the release of GPUStack 0.3, it also supports ModelScope, which provides a smoother deployment experience for users in China and meets the diverse model sourcing requirements of enterprises and developers.

Expanded OS support

GPUStack 0.3 now supports more operating systems, such as Ubuntu 20.04 and RHEL 8. GPUStack aims to fully support devices across amd64 (x86_64) and arm64 platforms on Linux, Windows, and macOS. The verified operating systems and architectures are listed below:

- macOS: amd64, arm64

- Windows: amd64

- Linux: amd64, arm64

Verified Linux distributions:

| Distributions | Versions |
|---|---|
| Ubuntu | >= 20.04 |
| Debian | >= 11 |
| RHEL | >= 8 |
| Rocky | >= 8 |
| Fedora | >= 36 |
| openSUSE | >= 15.3 (Leap) |
| openEuler | >= 22.03 |

We are continuously expanding GPUStack’s platform support, and work on additional platforms such as Windows arm64 is planned.

GPUStack currently requires Python >= 3.10. If your Python version is below 3.10, we recommend using Miniconda to create a Python environment that meets the version requirement.

For other enhancements and bug fixes, see the full changelog:

https://github.com/gpustack/gpustack/releases/tag/0.3.0

Join our community

For more information about GPUStack, please visit: https://gpustack.ai.

If you encounter any issues or have suggestions, feel free to join our Community for support from the GPUStack team and connect with users from around the world.

We are continuously improving the GPUStack project. Before getting started, we encourage you to follow and star our project on GitHub at gpustack/gpustack to receive updates on future releases. We also welcome contributions to the project.